#include <PLearner.h>

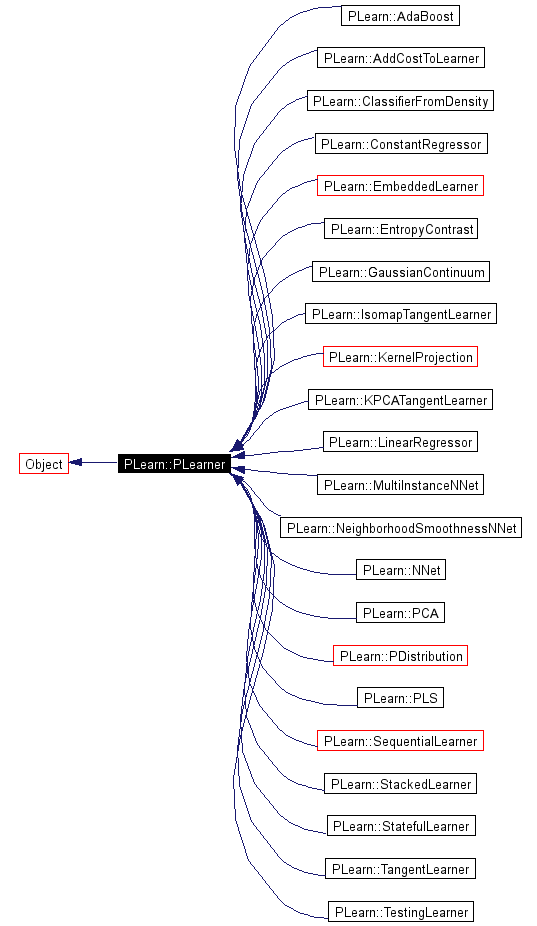

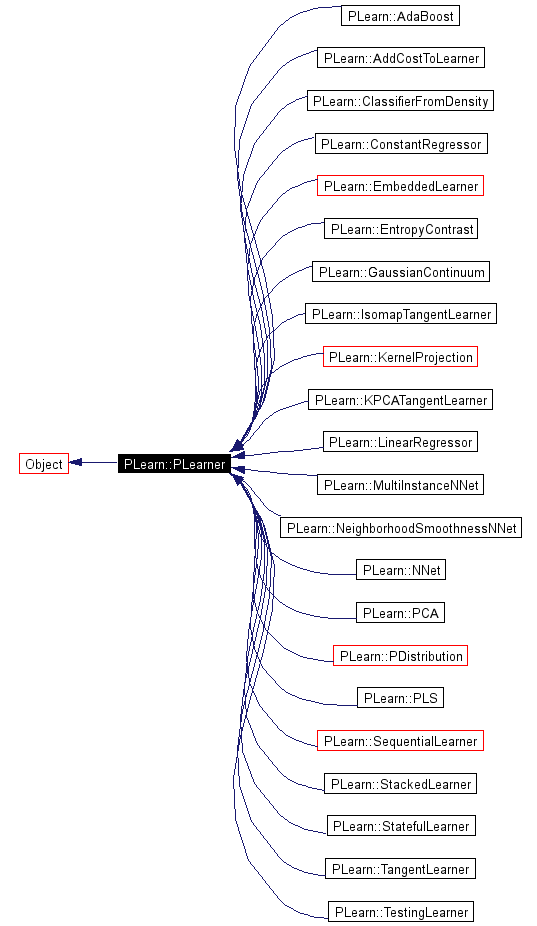

Inheritance diagram for PLearn::PLearner:

Public Member Functions | |

| PLearner () | |

| virtual | ~PLearner () |

| virtual void | setTrainingSet (VMat training_set, bool call_forget=true) |

| Declares the train_set, then calls build() and forget() if necessary. Note: you shouldn't have to overload this in subclasses, except perhaps to forward the call to an underlying learner. | |

| VMat | getTrainingSet () const |

| Returns the current train_set. | |

| virtual void | setValidationSet (VMat validset) |

| Set the validation set (optionally) for learners that are able to use it directly. | |

| VMat | getValidationSet () const |

| Returns the current validation set. | |

| virtual void | setTrainStatsCollector (PP< VecStatsCollector > statscol) |

| Sets the statistics collector whose update() method will be called during training. | |

| PP< VecStatsCollector > | getTrainStatsCollector () |

| Returns the train stats collector. | |

| virtual void | setExperimentDirectory (const string &the_expdir) |

| The experiment directory is the directory in which files related to this model are to be saved. | |

| string | getExperimentDirectory () const |

| This returns the currently set expdir (see setExperimentDirectory). | |

| virtual int | inputsize () const |

| Default returns train_set->inputsize(). | |

| virtual int | targetsize () const |

| Default returns train_set->targetsize(). | |

| virtual int | outputsize () const =0 |

| SUBCLASS WRITING: overload this so that it returns the size of this learner's output, as a function of its inputsize(), targetsize() and set options. | |

| virtual void | build () |

| **** SUBCLASS WRITING: **** This method should be redefined in subclasses to just call inherited::build() and then build_(). | |

| virtual void | forget ()=0 |

| (Re-)initializes the PLearner in its fresh state (that state may depend on the 'seed' option) and sets 'stage' back to 0 (this is the stage of a fresh learner!). | |

| virtual void | train ()=0 |

| The role of the train method is to bring the learner up to stage==nstages, updating the stats with training costs measured on-line in the process. | |

| virtual void | computeOutput (const Vec &input, Vec &output) const =0 |

| *** SUBCLASS WRITING: *** This should be defined in subclasses to compute the output from the input | |

| virtual void | computeCostsFromOutputs (const Vec &input, const Vec &output, const Vec &target, Vec &costs) const =0 |

| *** SUBCLASS WRITING: *** This should be defined in subclasses to compute the weighted costs from already computed output. | |

| virtual void | computeOutputAndCosts (const Vec &input, const Vec &target, Vec &output, Vec &costs) const |

| Default calls computeOutput and computeCostsFromOutputs. You may overload this if you have a more efficient way to compute both output and weighted costs at the same time. | |

| virtual void | computeCostsOnly (const Vec &input, const Vec &target, Vec &costs) const |

| Default calls computeOutputAndCosts. This may be overloaded if there is a more efficient way to compute the costs directly, without computing the whole output vector. | |

| virtual void | use (VMat testset, VMat outputs) const |

| Computes outputs for the input part of testset. | |

| virtual void | useOnTrain (Mat &outputs) const |

| Compute the output on the training set of the learner, and save the result in the provided matrix. | |

| virtual void | test (VMat testset, PP< VecStatsCollector > test_stats, VMat testoutputs=0, VMat testcosts=0) const |

| Performs test on testset, updating test cost statistics, and optionally filling testoutputs and testcosts. The default version repeatedly calls computeOutputAndCosts or computeCostsOnly. | |

| virtual TVec< string > | getTestCostNames () const =0 |

| *** SUBCLASS WRITING: *** This should return the names of the costs computed by computeCostsFromOutputs. | |

| virtual TVec< string > | getTrainCostNames () const =0 |

| *** SUBCLASS WRITING: *** This should return the names of the objective costs that the train method computes and for which it updates the VecStatsCollector train_stats | |

| virtual int | nTestCosts () const |

| Caches getTestCostNames().size() in an internal variable the first time it is called, and then returns the content of this variable. | |

| virtual int | nTrainCosts () const |

| Caches getTrainCostNames().size() in an internal variable the first time it is called, and then returns the content of this variable. | |

| int | getTestCostIndex (const string &costname) const |

| Returns the index of the given cost in the vector of testcosts; calls PLERROR (throws a PLearnException) if the requested cost is not found. | |

| int | getTrainCostIndex (const string &costname) const |

| Returns the index of the given cost in the vector of traincosts (objectives); calls PLERROR (throws a PLearnException) if the requested cost is not found. | |

| virtual void | resetInternalState () |

| Resets the internal state, if any. Default: do nothing. | |

| virtual bool | isStatefulLearner () const |

| Does this PLearner have an internal state? Default: false. | |

| PLEARN_DECLARE_ABSTRACT_OBJECT (PLearner) | |

| Does the necessary operations to transform a shallow copy (this) into a deep copy by deep-copying all the members that need to be. | |

| virtual void | matlabSave (const string &matlab_subdir) |

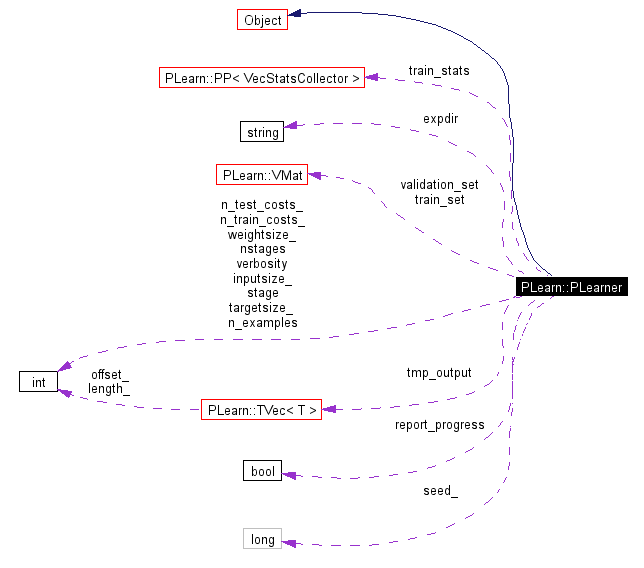

Public Attributes | |

| string | expdir |

| Path of the directory associated with this learner, in which it should save any file it wishes to create. | |

| long | seed_ |

| the seed used for the random number generator in initializing the learner (see forget() method). | |

| int | stage |

| 0 means untrained, n often means after n epochs or optimization steps, etc. | |

| int | nstages |

| The meaning of 'stage' is learner-dependent, but for learners whose training is incremental (such as involving incremental optimization), it is typically synonymous with the number of 'epochs'. | |

| bool | report_progress |

| Whether progress in learning and testing should be reported in a ProgressBar. | |

| int | verbosity |

Protected Member Functions | |

| virtual void | build_from_train_set () |

| Building part of the PLearner that needs the train_set. | |

| virtual void | makeDeepCopyFromShallowCopy (CopiesMap &copies) |

Static Protected Member Functions | |

| void | declareOptions (OptionList &ol) |

| Redefine this in subclasses: call declareOption(...) for each option, and then call inherited::declareOptions(options) (see the declareOption function further down). | |

Protected Attributes | |

| VMat | train_set |

| The training set as set by setTrainingSet. | |

| int | inputsize_ |

| int | targetsize_ |

| int | weightsize_ |

| int | n_examples |

| VMat | validation_set |

| PP< VecStatsCollector > | train_stats |

| The stats_collector responsible for collecting train cost statistics during training. | |

Private Types | |

| typedef Object | inherited |

Private Member Functions | |

| void | build_ () |

Private Attributes | |

| int | n_train_costs_ |

| int | n_test_costs_ |

| Vec | tmp_output |

| Global storage to save memory allocations. | |

Definition at line 61 of file PLearner.h.
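The summary entry for build() asks subclasses to redefine it so that it calls inherited::build() and then their own build_(). The sketch below illustrates that calling order with stand-in classes. `BaseLearner`, `MyLearner`, `hidden_units`, `weights` and `build_log` are hypothetical names introduced for this example and are not part of PLearn.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical stand-in for the parent class; not PLearn's real Object/PLearner.
class BaseLearner {
public:
    virtual ~BaseLearner() {}
    virtual void build() { build_(); }
    std::vector<std::string> build_log;   // records the order of build_ calls
private:
    void build_() { build_log.push_back("BaseLearner::build_"); }
};

class MyLearner : public BaseLearner {
    typedef BaseLearner inherited;        // same idiom as 'typedef Object inherited'
public:
    int hidden_units;                     // hypothetical option
    std::vector<double> weights;          // structure derived from the option

    MyLearner() : hidden_units(0) {}

    virtual void build() {
        inherited::build();               // first let the parent build its part
        build_();                         // then build this class's own part
    }
private:
    // Non-virtual and private: each class owns exactly one build_().
    void build_() {
        assert(hidden_units >= 0);
        weights.assign(static_cast<std::size_t>(hidden_units), 0.0);
        build_log.push_back("MyLearner::build_");
    }
};
```

Keeping build_() private and non-virtual means every level of the hierarchy runs its own build step exactly once, parent first, whenever build() is called.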

Definition at line 50 of file PLearner.cc.

Definition at line 208 of file PLearner.cc.

Building part of the PLearner that needs the train_set.

Definition at line 183 of file PLearner.h.

Default calls computeOutputAndCosts. This may be overloaded if there is a more efficient way to compute the costs directly, without computing the whole output vector.

Reimplemented in PLearn::GaussianProcessRegressor, PLearn::EmbeddedLearner, PLearn::StatefulLearner, PLearn::SequentialLearner, and PLearn::SequentialModelSelector.

Definition at line 255 of file PLearner.cc.

References computeOutputAndCosts(), outputsize(), PLearn::TVec< T >::resize(), and tmp_output.

Referenced by test().

*** SUBCLASS WRITING: *** This should be defined in subclasses to compute the output from the input.

Implemented in PLearn::AdaBoost, PLearn::ClassifierFromDensity, PLearn::MultiInstanceNNet, PLearn::GaussianProcessRegressor, PLearn::PConditionalDistribution, PLearn::PDistribution, PLearn::AddCostToLearner, PLearn::EmbeddedLearner, PLearn::NeighborhoodSmoothnessNNet, PLearn::NNet, PLearn::SelectInputSubsetLearner, PLearn::StackedLearner, PLearn::StatefulLearner, PLearn::TestingLearner, PLearn::ConstantRegressor, PLearn::LinearRegressor, PLearn::PLS, PLearn::SequentialLearner, PLearn::SequentialModelSelector, PLearn::EntropyContrast, PLearn::GaussianContinuum, PLearn::IsomapTangentLearner, PLearn::KernelProjection, PLearn::KPCATangentLearner, PLearn::PCA, and PLearn::TangentLearner.

Referenced by computeOutputAndCosts(), and use().

Default calls computeOutput and computeCostsFromOutputs. You may overload this if you have a more efficient way to compute both output and weighted costs at the same time.

Reimplemented in PLearn::MultiInstanceNNet, PLearn::GaussianProcessRegressor, PLearn::EmbeddedLearner, PLearn::NeighborhoodSmoothnessNNet, PLearn::NNet, PLearn::SelectInputSubsetLearner, PLearn::StatefulLearner, PLearn::SequentialLearner, and PLearn::SequentialModelSelector.

Definition at line 248 of file PLearner.cc.

References computeCostsFromOutputs(), and computeOutput().

Referenced by computeCostsOnly(), and test().
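The default delegation described for computeCostsOnly, computeOutput and computeOutputAndCosts can be sketched as follows. This is an illustration only: `SketchLearner` and `SumLearner` are hypothetical classes, and `Vec` is a `std::vector<double>` stand-in for PLearn's own vector type.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

typedef std::vector<double> Vec;   // stand-in for PLearn's Vec

class SketchLearner {
public:
    virtual ~SketchLearner() {}
    virtual int outputsize() const = 0;
    virtual void computeOutput(const Vec& input, Vec& output) const = 0;
    virtual void computeCostsFromOutputs(const Vec& input, const Vec& output,
                                         const Vec& target, Vec& costs) const = 0;

    // Default: compute the output, then derive the costs from it.
    virtual void computeOutputAndCosts(const Vec& input, const Vec& target,
                                       Vec& output, Vec& costs) const {
        computeOutput(input, output);
        computeCostsFromOutputs(input, output, target, costs);
    }

    // Default: compute the output into scratch storage and discard it.
    virtual void computeCostsOnly(const Vec& input, const Vec& target,
                                  Vec& costs) const {
        tmp_output.resize(static_cast<std::size_t>(outputsize()));
        computeOutputAndCosts(input, target, tmp_output, costs);
    }
private:
    mutable Vec tmp_output;  // reused across calls to avoid reallocation
};

// Toy subclass: predicts the sum of the inputs; the cost is the squared error.
class SumLearner : public SketchLearner {
public:
    virtual int outputsize() const { return 1; }
    virtual void computeOutput(const Vec& input, Vec& output) const {
        output.assign(1, 0.0);
        for (std::size_t i = 0; i < input.size(); ++i)
            output[0] += input[i];
    }
    virtual void computeCostsFromOutputs(const Vec&, const Vec& output,
                                         const Vec& target, Vec& costs) const {
        assert(!output.empty() && !target.empty());
        const double diff = output[0] - target[0];
        costs.assign(1, diff * diff);
    }
};
```

A subclass only has to supply the two pure virtual computations. It would override computeOutputAndCosts or computeCostsOnly only when it can share or skip work between them.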

(Re-)initializes the PLearner in its fresh state (that state may depend on the 'seed' option) and sets 'stage' back to 0 (this is the stage of a fresh learner!).

*** SUBCLASS WRITING: *** A typical forget() method should do the following:

Implemented in PLearn::AdaBoost, PLearn::ClassifierFromDensity, PLearn::MultiInstanceNNet, PLearn::ConditionalDensityNet, PLearn::GaussianDistribution, PLearn::GaussianProcessRegressor, PLearn::GaussMix, PLearn::PDistribution, PLearn::AddCostToLearner, PLearn::EmbeddedLearner, PLearn::NeighborhoodSmoothnessNNet, PLearn::NNet, PLearn::StackedLearner, PLearn::StatefulLearner, PLearn::TestingLearner, PLearn::ConstantRegressor, PLearn::LinearRegressor, PLearn::PLS, PLearn::EmbeddedSequentialLearner, PLearn::MovingAverage, PLearn::SequentialLearner, PLearn::SequentialModelSelector, PLearn::EntropyContrast, PLearn::GaussianContinuum, PLearn::Isomap, PLearn::IsomapTangentLearner, PLearn::KernelPCA, PLearn::KernelProjection, PLearn::KPCATangentLearner, PLearn::LLE, PLearn::PCA, PLearn::SpectralClustering, and PLearn::TangentLearner.

Referenced by setTrainingSet().

This returns the currently set expdir (see setExperimentDirectory).

Definition at line 162 of file PLearner.h.

References expdir.

Referenced by PLearn::SequentialModelSelector::matlabSave(), PLearn::SequentialLearner::matlabSave(), and PLearn::ClassifierFromDensity::train().

Returns the index of the given cost in the vector of testcosts; calls PLERROR (throws a PLearnException) if the requested cost is not found.

Reimplemented in PLearn::GaussianProcessRegressor.

Definition at line 226 of file PLearner.cc.

References getTestCostNames(), PLearn::TVec< T >::length(), PLERROR, and PLearn::tostring().
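The lookup described here amounts to a linear search over the cost names that fails loudly when the name is missing. A minimal sketch, assuming a free function `costIndex` (a hypothetical name) and using `std::runtime_error` in place of PLERROR and PLearnException:

```cpp
#include <cassert>
#include <cstddef>
#include <stdexcept>
#include <string>
#include <vector>

// Returns the position of costname in names; throws if it is absent.
// std::runtime_error stands in for PLERROR / PLearnException here.
int costIndex(const std::vector<std::string>& names, const std::string& costname) {
    for (std::size_t i = 0; i < names.size(); ++i)
        if (names[i] == costname)
            return static_cast<int>(i);
    throw std::runtime_error("No cost named '" + costname + "'");
}
```

Throwing rather than returning a sentinel such as -1 keeps a misspelled cost name from silently indexing the wrong column of a costs vector.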

*** SUBCLASS WRITING: *** This should return the names of the costs computed by computeCostsFromOutputs.

Implemented in PLearn::AdaBoost, PLearn::ClassifierFromDensity, PLearn::MultiInstanceNNet, PLearn::GaussianProcessRegressor, PLearn::PDistribution, PLearn::AddCostToLearner, PLearn::EmbeddedLearner, PLearn::NeighborhoodSmoothnessNNet, PLearn::NNet, PLearn::StackedLearner, PLearn::TestingLearner, PLearn::ConstantRegressor, PLearn::LinearRegressor, PLearn::PLS, PLearn::EmbeddedSequentialLearner, PLearn::MovingAverage, PLearn::SequentialModelSelector, PLearn::EntropyContrast, PLearn::GaussianContinuum, PLearn::IsomapTangentLearner, PLearn::KernelProjection, PLearn::KPCATangentLearner, PLearn::PCA, and PLearn::TangentLearner.

Referenced by getTestCostIndex(), PLearn::SequentialLearner::matlabSave(), PLearn::SequentialLearner::nTestCosts(), and nTestCosts().

Returns the index of the given cost in the vector of traincosts (objectives); calls PLERROR (throws a PLearnException) if the requested cost is not found.

Reimplemented in PLearn::GaussianProcessRegressor.

Definition at line 237 of file PLearner.cc.

References getTrainCostNames(), PLearn::TVec< T >::length(), PLERROR, and PLearn::tostring().

*** SUBCLASS WRITING: *** This should return the names of the objective costs that the train method computes and for which it updates the VecStatsCollector train_stats.

Implemented in PLearn::AdaBoost, PLearn::ClassifierFromDensity, PLearn::MultiInstanceNNet, PLearn::ConditionalDensityNet, PLearn::GaussianProcessRegressor, PLearn::PDistribution, PLearn::AddCostToLearner, PLearn::EmbeddedLearner, PLearn::NeighborhoodSmoothnessNNet, PLearn::NNet, PLearn::StackedLearner, PLearn::TestingLearner, PLearn::ConstantRegressor, PLearn::LinearRegressor, PLearn::PLS, PLearn::EmbeddedSequentialLearner, PLearn::MovingAverage, PLearn::SequentialModelSelector, PLearn::EntropyContrast, PLearn::GaussianContinuum, PLearn::IsomapTangentLearner, PLearn::KernelProjection, PLearn::KPCATangentLearner, PLearn::PCA, and PLearn::TangentLearner.

Referenced by getTrainCostIndex(), and nTrainCosts().

Returns the current train_set.

Definition at line 138 of file PLearner.h.

References train_set.

Referenced by PLearn::GaussianDistribution::train().

Returns the train stats collector.

Definition at line 152 of file PLearner.h.

References train_stats.

Returns the current validation set.

Definition at line 144 of file PLearner.h.

References validation_set.

Does this PLearner have an internal state? Default: false.

Reimplemented in PLearn::StatefulLearner.

Definition at line 362 of file PLearner.cc.

Does the necessary operations to transform a shallow copy (this) into a deep copy by deep-copying all the members that need to be.

Typical implementation:

```cpp
void CLASS_OF_THIS::makeDeepCopyFromShallowCopy(CopiesMap& copies)
{
    SUPERCLASS_OF_THIS::makeDeepCopyFromShallowCopy(copies);
    member_ptr = member_ptr->deepCopy(copies);
    member_smartptr = member_smartptr->deepCopy(copies);
    member_mat.makeDeepCopyFromShallowCopy(copies);
    member_vec.makeDeepCopyFromShallowCopy(copies);
    ...
}
```

Reimplemented from PLearn::Object.

Reimplemented in PLearn::MultiInstanceNNet, PLearn::GaussianDistribution, PLearn::NeighborhoodSmoothnessNNet, PLearn::NNet, PLearn::EmbeddedSequentialLearner, PLearn::SequentialLearner, and PLearn::SequentialModelSelector.

Definition at line 68 of file PLearner.cc.

References PLearn::CopiesMap, PLearn::deepCopyField(), tmp_output, and train_stats.
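To make the shallow-to-deep conversion concrete, here is a self-contained sketch of the general technique using standard smart pointers. It assumes that the copies map records which originals have already been copied, so that two members sharing one object still share one object after the copy. `Buffer`, `Holder`, `CopiesSketch` and this `deepCopy` are hypothetical and do not reproduce PLearn's CopiesMap or deepCopyField.

```cpp
#include <cassert>
#include <map>
#include <memory>

struct Buffer { int value; };

// Maps the address of an original object to its already-made copy.
typedef std::map<const void*, std::shared_ptr<void> > CopiesSketch;

std::shared_ptr<Buffer> deepCopy(const std::shared_ptr<Buffer>& src,
                                 CopiesSketch& copies) {
    if (!src)
        return std::shared_ptr<Buffer>();
    CopiesSketch::iterator it = copies.find(src.get());
    if (it != copies.end())                       // already copied: reuse it
        return std::static_pointer_cast<Buffer>(it->second);
    std::shared_ptr<Buffer> copy = std::make_shared<Buffer>(*src);
    copies[src.get()] = copy;                     // remember it for later aliases
    return copy;
}

struct Holder {
    std::shared_ptr<Buffer> a;
    std::shared_ptr<Buffer> b;

    // 'this' starts as a member-wise (shallow) copy; replace each shared
    // member by a deep copy, consulting the map so aliases stay aliases.
    void makeDeepCopyFromShallowCopy(CopiesSketch& copies) {
        a = deepCopy(a, copies);
        b = deepCopy(b, copies);
    }
};
```

In this sketch a caller first copies the object member-wise, then calls makeDeepCopyFromShallowCopy on the copy with a fresh map. After that, writes through the copy no longer reach the original.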

Makes the PLearner save some data in a MATLAB-readable format. The data will be saved in expdir/matlab_subdir through the global matlabSave function (MatIO.h).

Reimplemented in PLearn::SequentialLearner, and PLearn::SequentialModelSelector.

Definition at line 350 of file PLearner.h.

Caches getTestCostNames().size() in an internal variable the first time it is called, and then returns the content of this variable.

Reimplemented in PLearn::GaussianProcessRegressor, and PLearn::SequentialLearner.

Definition at line 212 of file PLearner.cc.

References getTestCostNames(), n_test_costs_, and PLearn::TVec< string >::size().

Referenced by PLearn::StatefulLearner::computeOutput(), and test().
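The caching behaviour described above can be sketched with a mutable member that holds a "not yet computed" marker. The sentinel value -1, the class `CostCounter` and the `name_queries` counter are assumptions made for this illustration; the source only states that the size is cached on the first call.

```cpp
#include <cassert>
#include <string>
#include <vector>

class CostCounter {
public:
    CostCounter() : name_queries(0), n_test_costs_(-1) {}

    // Stand-in for a subclass's getTestCostNames(); counts how often it runs.
    std::vector<std::string> getTestCostNames() const {
        ++name_queries;
        std::vector<std::string> names;
        names.push_back("mse");
        names.push_back("class_error");
        return names;
    }

    // Computes the count on the first call only, then serves the cached value.
    int nTestCosts() const {
        if (n_test_costs_ < 0)
            n_test_costs_ = static_cast<int>(getTestCostNames().size());
        return n_test_costs_;
    }

    mutable int name_queries;   // instrumentation for the example only
private:
    mutable int n_test_costs_;  // -1 means "not cached yet" (assumed sentinel)
};
```

One consequence of caching is that the count is fixed after the first call, so a learner whose cost names can change would need to refresh or bypass the cache.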

Caches getTrainCostNames().size() in an internal variable the first time it is called, and then returns the content of this variable.

Reimplemented in PLearn::GaussianProcessRegressor.

Definition at line 219 of file PLearner.cc.

References getTrainCostNames(), n_train_costs_, and PLearn::TVec< string >::size().

SUBCLASS WRITING: overload this so that it returns the size of this learner's output, as a function of its inputsize(), targetsize() and set options.

Implemented in PLearn::AdaBoost, PLearn::ClassifierFromDensity, PLearn::MultiInstanceNNet, PLearn::GaussianProcessRegressor, PLearn::PDistribution, PLearn::AddCostToLearner, PLearn::EmbeddedLearner, PLearn::NeighborhoodSmoothnessNNet, PLearn::NNet, PLearn::StackedLearner, PLearn::TestingLearner, PLearn::ConstantRegressor, PLearn::LinearRegressor, PLearn::PLS, PLearn::SequentialLearner, PLearn::EntropyContrast, PLearn::GaussianContinuum, PLearn::IsomapTangentLearner, PLearn::KernelProjection, PLearn::KPCATangentLearner, PLearn::PCA, and PLearn::TangentLearner.

Referenced by PLearn::StatefulLearner::computeCostsOnly(), computeCostsOnly(), test(), and use().


Does the necessary operations to transform a shallow copy (this) into a deep copy by deep-copying all the members that need to be.

Resets the internal state, if any. Default: do nothing.

Definition at line 359 of file PLearner.cc.

The experiment directory is the directory in which files related to this model are to be saved. If it is an empty string, it is understood to mean that the user doesn't want any file created by this learner.

Reimplemented in PLearn::AddCostToLearner, PLearn::TestingLearner, and PLearn::SequentialModelSelector.

Definition at line 139 of file PLearner.cc.

References PLearn::abspath(), expdir, PLearn::force_mkdir(), and PLERROR.

Declares the train_set, then calls build() and forget() if necessary. Note: you shouldn't have to overload this in subclasses, except perhaps to forward the call to an underlying learner.

Reimplemented in PLearn::MultiInstanceNNet, PLearn::PDistribution, PLearn::AddCostToLearner, PLearn::NeighborhoodSmoothnessNNet, PLearn::SelectInputSubsetLearner, PLearn::StackedLearner, PLearn::StatefulLearner, PLearn::TestingLearner, PLearn::SequentialLearner, and PLearn::KernelProjection.

Definition at line 151 of file PLearner.cc.

References build(), forget(), inputsize_, PLearn::VMat::length(), n_examples, targetsize_, train_set, and weightsize_.

Referenced by PLearn::PCA::train().

Sets the statistics collector whose update() method will be called during training. Note: You shouldn't have to overload this in subclasses, except maybe to forward the call to an underlying learner.

Reimplemented in PLearn::AddCostToLearner, and PLearn::StackedLearner.

Definition at line 174 of file PLearner.cc.

References train_stats.

Set the validation set (optionally) for learners that are able to use it directly.

Definition at line 170 of file PLearner.cc.

References validation_set.

Default returns train_set->targetsize().

Reimplemented in PLearn::EmbeddedLearner.

Definition at line 185 of file PLearner.cc.

References PLERROR, and targetsize_.

Referenced by PLearn::NNet::build_(), PLearn::NeighborhoodSmoothnessNNet::build_(), PLearn::MultiInstanceNNet::build_(), PLearn::StatefulLearner::computeOutput(), PLearn::LinearRegressor::outputsize(), PLearn::ConstantRegressor::outputsize(), PLearn::MovingAverage::test(), PLearn::MovingAverage::train(), PLearn::LinearRegressor::train(), PLearn::HistogramDistribution::train(), PLearn::GaussianProcessRegressor::train(), PLearn::EmbeddedSequentialLearner::train(), PLearn::ConstantRegressor::train(), PLearn::ConditionalDensityNet::train(), PLearn::ClassifierFromDensity::train(), and PLearn::AdaBoost::train().

Performs test on testset, updating test cost statistics, and optionally filling testoutputs and testcosts. The default version repeatedly calls computeOutputAndCosts or computeCostsOnly.

Reimplemented in PLearn::EmbeddedLearner, PLearn::EmbeddedSequentialLearner, PLearn::MovingAverage, PLearn::SequentialLearner, and PLearn::SequentialModelSelector.

Definition at line 302 of file PLearner.cc.

References computeCostsOnly(), computeOutputAndCosts(), PLearn::TVec< T >::fill(), PLearn::VMat::getExample(), PLearn::VMat::length(), nTestCosts(), outputsize(), report_progress, and PLearn::ProgressBar::update().

The role of the train method is to bring the learner up to stage==nstages, updating the stats with training costs measured on-line in the process.

*** SUBCLASS WRITING: *** TYPICAL CODE:

```cpp
static Vec input;   // static so we don't reallocate/deallocate memory each time...
static Vec target;  // (but be careful that static means shared!)
input.resize(inputsize());    // the train_set's inputsize()
target.resize(targetsize());  // the train_set's targetsize()
real weight;

if(!train_stats)  // make a default stats collector, in case there's none
    train_stats = new VecStatsCollector();

if(nstages<stage) // asking to revert to a previous stage!
    forget();     // reset the learner to stage=0

while(stage<nstages)
{
    // clear statistics of previous epoch
    train_stats->forget();

    // ... train for 1 stage, and update train_stats,
    // using train_set->getSample(input, target, weight);
    // and train_stats->update(train_costs)

    ++stage;
    train_stats->finalize(); // finalize statistics for this epoch
}
```

Implemented in PLearn::AdaBoost, PLearn::ClassifierFromDensity, PLearn::MultiInstanceNNet, PLearn::ConditionalDensityNet, PLearn::GaussianDistribution, PLearn::GaussianProcessRegressor, PLearn::GaussMix, PLearn::HistogramDistribution, PLearn::ManifoldParzen2, PLearn::PDistribution, PLearn::AddCostToLearner, PLearn::EmbeddedLearner, PLearn::NeighborhoodSmoothnessNNet, PLearn::NNet, PLearn::StackedLearner, PLearn::TestingLearner, PLearn::ConstantRegressor, PLearn::LinearRegressor, PLearn::PLS, PLearn::EmbeddedSequentialLearner, PLearn::MovingAverage, PLearn::SequentialLearner, PLearn::SequentialModelSelector, PLearn::EntropyContrast, PLearn::GaussianContinuum, PLearn::IsomapTangentLearner, PLearn::KernelProjection, PLearn::KPCATangentLearner, PLearn::PCA, and PLearn::TangentLearner.
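The stage and nstages contract of train() can also be exercised in isolation. The toy class below is a sketch that does not depend on PLearn: `ToyLearner`, its `targets` vector (standing in for the training set) and `epoch_costs` (standing in for train_stats) are hypothetical, and the update rule is arbitrary.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Toy learner: each stage is one pass over the data that moves 'estimate'
// halfway toward the mean of the targets.
class ToyLearner {
public:
    int stage;
    int nstages;
    double estimate;
    std::vector<double> targets;      // stand-in for the training set
    std::vector<double> epoch_costs;  // stand-in for train_stats

    ToyLearner() : stage(0), nstages(0), estimate(0.0) {}

    // Back to the fresh state; stage returns to 0.
    void forget() { estimate = 0.0; stage = 0; }

    // Brings the learner up to stage == nstages.
    void train() {
        assert(!targets.empty());
        if (nstages < stage)          // asked to revert to an earlier stage
            forget();
        while (stage < nstages) {
            double mean = 0.0;
            double cost = 0.0;
            for (std::size_t i = 0; i < targets.size(); ++i) {
                const double err = targets[i] - estimate;
                mean += targets[i];
                cost += err * err;
            }
            mean /= static_cast<double>(targets.size());
            cost /= static_cast<double>(targets.size());
            epoch_costs.push_back(cost);          // cost measured on-line
            estimate += 0.5 * (mean - estimate);  // one stage of "learning"
            ++stage;
        }
    }
};
```

Calling train() again without raising nstages does nothing, and lowering nstages below the current stage restarts from a fresh learner. Both behaviours match the roles of forget() and train() given in this section.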

Computes outputs for the input part of testset. testset is not required to contain a target part. The default version repeatedly calls computeOutput.

Definition at line 265 of file PLearner.cc.

References computeOutput(), PLearn::VMat::getExample(), PLearn::VMat::length(), outputsize(), report_progress, and PLearn::ProgressBar::update().

Compute the output on the training set of the learner, and save the result in the provided matrix.

Definition at line 293 of file PLearner.cc.

References PLearn::Mat, PLWARNING, train_set, and PLearn::use().

Path of the directory associated with this learner, in which it should save any file it wishes to create. The directory will be created if it does not already exist. If expdir is the empty string (the default), then the learner should not create *any* file. Note that most file creation and reporting is in any case handled at the level of the PTester class rather than by the learner.

Definition at line 85 of file PLearner.h.

Referenced by build_(), getExperimentDirectory(), and setExperimentDirectory().

Definition at line 119 of file PLearner.h.

Referenced by inputsize(), and setTrainingSet().

Reimplemented in PLearn::KernelProjection.

Definition at line 119 of file PLearner.h.

Referenced by setTrainingSet().

Definition at line 69 of file PLearner.h.

Referenced by nTestCosts().

Definition at line 68 of file PLearner.h.

Referenced by nTrainCosts().

The meaning of 'stage' is learner-dependent, but for learners whose training is incremental (such as involving incremental optimization), it is typically synonymous with the number of 'epochs', i.e. the number of passes of the optimization process through the whole training set since the last fresh initialisation.

Definition at line 94 of file PLearner.h.

Whether progress in learning and testing should be reported in a ProgressBar.

Definition at line 101 of file PLearner.h.

The seed used for the random number generator in initializing the learner (see forget() method).

Definition at line 87 of file PLearner.h.

0 means untrained, n often means after n epochs or optimization steps, etc. The true meaning is learner-dependent. You should never modify this option directly! It is the role of forget() to bring it back to 0, and the role of train() to bring it up to 'nstages'.

Definition at line 88 of file PLearner.h.

Definition at line 119 of file PLearner.h.

Referenced by setTrainingSet(), and targetsize().

Global storage to save memory allocations.

Definition at line 72 of file PLearner.h.

Referenced by computeCostsOnly(), and makeDeepCopyFromShallowCopy().

The training set as set by setTrainingSet. Data-sets are seen as matrices whose columns or fields are laid out as follows: a number of input fields, followed by (optional) target fields, followed by an (optional) weight field (to weigh each example). The sizes of those areas are given by the VMatrix options inputsize, targetsize, and weightsize, which are typically used by the learner upon building.

Definition at line 116 of file PLearner.h.

Referenced by getTrainingSet(), setTrainingSet(), and useOnTrain().
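The column layout described for train_set (inputs, then optional targets, then an optional weight) can be illustrated by splitting a single row. This is a sketch with hypothetical names (`Example`, `splitRow`). Giving an unweighted example a weight of 1 is an assumption of this sketch, not something the source states.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct Example {
    std::vector<double> input;
    std::vector<double> target;
    double weight;
};

// Splits one data-set row laid out as [inputs | targets | optional weight].
// weightsize is expected to be 0 or 1; an absent weight defaults to 1
// (an assumption made for this sketch).
Example splitRow(const std::vector<double>& row,
                 int inputsize, int targetsize, int weightsize) {
    assert(inputsize >= 0 && targetsize >= 0);
    assert(weightsize == 0 || weightsize == 1);
    assert(row.size() ==
           static_cast<std::size_t>(inputsize + targetsize + weightsize));

    Example ex;
    ex.input.assign(row.begin(), row.begin() + inputsize);
    ex.target.assign(row.begin() + inputsize,
                     row.begin() + inputsize + targetsize);
    ex.weight = (weightsize == 1)
        ? row[static_cast<std::size_t>(inputsize + targetsize)]
        : 1.0;
    return ex;
}
```

For a five-column row with inputsize 3, targetsize 1 and weightsize 1, the first three values form the input, the fourth is the target and the fifth is the example's weight.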

The stats_collector responsible for collecting train cost statistics during training. This is typically set by some external training harness that wants to collect some stats.

Definition at line 125 of file PLearner.h.

Referenced by getTrainStatsCollector(), makeDeepCopyFromShallowCopy(), and setTrainStatsCollector().

Reimplemented in PLearn::EntropyContrast.

Definition at line 121 of file PLearner.h.

Referenced by getValidationSet(), and setValidationSet().

Definition at line 102 of file PLearner.h.

Definition at line 119 of file PLearner.h.

Referenced by setTrainingSet().
