#include <GaussianProcessRegressor.h>

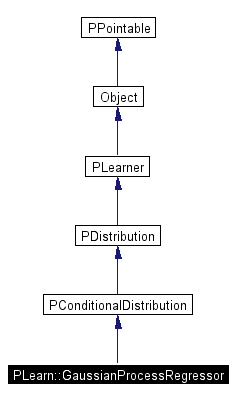

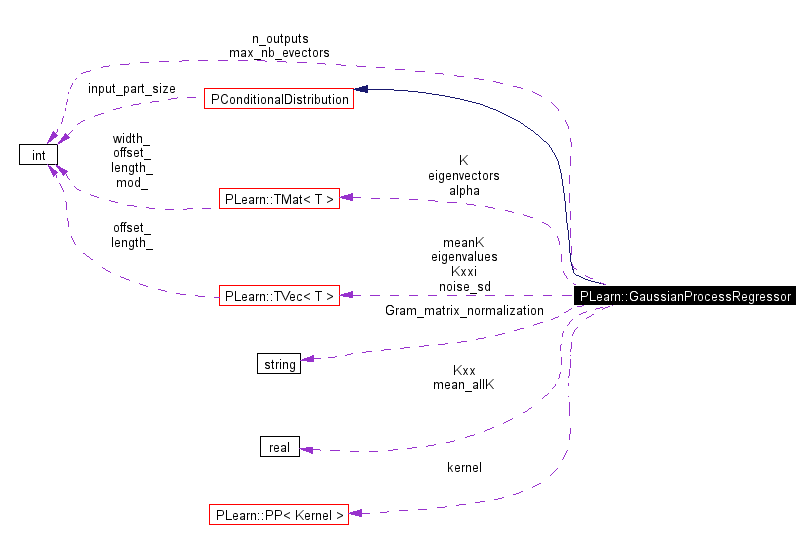

Inheritance diagram for PLearn::GaussianProcessRegressor:

Public Types

| Type | Member | Description |
|---|---|---|
| typedef PConditionalDistribution | inherited | |

Public Member Functions

| Type | Member | Description |
|---|---|---|
| | GaussianProcessRegressor () | |
| virtual | ~GaussianProcessRegressor () | |
| virtual void | makeDeepCopyFromShallowCopy (map< const void *, void * > &copies) | Transforms a shallow copy into a deep copy. |
| virtual void | setInput (const Vec &input) | Sets the input part before using the inherited methods. |
| virtual void | setInput_const (const Vec &input) const | |
| virtual double | log_density (const Vec &x) const | Returns the log of the probability density, log(p(x)). |
| virtual Vec | expectation () const | Returns E[X]. |
| virtual void | expectation (Vec expected_y) const | Returns E[X]. |
| virtual Mat | variance () const | Returns Var[X]. |
| virtual void | variance (Vec diag_variances) const | |
| virtual void | build () | Simply calls inherited::build(), then build_(). |
| virtual void | forget () | (Re-)initializes the PLearner in its fresh state (which may depend on the 'seed' option) and sets 'stage' back to 0, the stage of a fresh learner. |
| virtual int | outputsize () const | The returned value depends on outputs_def. |
| virtual void | train () | Brings the learner up to stage == nstages, updating the stats with training costs measured online in the process. |
| virtual void | computeOutput (const Vec &input, Vec &output) const | Produces outputs according to what is specified in outputs_def. |
| virtual void | computeCostsFromOutputs (const Vec &input, const Vec &output, const Vec &target, Vec &costs) const | Computes the weighted costs from an already computed output; should be defined in subclasses. |
| virtual void | computeOutputAndCosts (const Vec &input, const Vec &target, Vec &output, Vec &costs) const | By default, calls computeOutput and computeCostsFromOutputs. You may overload this if you have a more efficient way to compute both the output and the weighted costs at the same time. |
| virtual void | computeCostsOnly (const Vec &input, const Vec &target, Vec &costs) const | By default, calls computeOutputAndCosts. This may be overloaded if there is a more efficient way to compute the costs directly, without computing the whole output vector. |
| virtual TVec< string > | getTestCostNames () const | Returns the names of the costs computed by computeCostsFromOutputs. |
| virtual TVec< string > | getTrainCostNames () const | Returns the names of the objective costs that the train method computes and for which it updates the VecStatsCollector train_stats. |
| virtual int | nTestCosts () const | Caches getTestCostNames().size() in an internal variable the first time it is called, then returns the content of this variable. |
| virtual int | nTrainCosts () const | Caches getTrainCostNames().size() in an internal variable the first time it is called, then returns the content of this variable. |
| int | getTestCostIndex (const string &costname) const | Returns the index of the given cost in the vector of test costs (-1 if not found). |
| int | getTrainCostIndex (const string &costname) const | Returns the index of the given cost in the vector of train costs (objectives) (-1 if not found). |
| | PLEARN_DECLARE_OBJECT (GaussianProcessRegressor) | |

Public Attributes

| Type | Member |
|---|---|
| PP< Kernel > | kernel |
| int | n_outputs |
| Vec | noise_sd |
| string | Gram_matrix_normalization |
| int | max_nb_evectors |
| Mat | alpha |
| Vec | Kxxi |
| real | Kxx |
| Mat | K |
| Mat | eigenvectors |
| Vec | eigenvalues |
| Vec | meanK |
| real | mean_allK |

Protected Member Functions

| Type | Member | Description |
|---|---|---|
| void | inverseCovTimesVec (real sigma, Vec v, Vec Cinv_v) const | |
| real | QFormInverse (real sigma2, Vec u) const | |
| real | BayesianCost () | Used for hyper-parameter selection: the negative log-likelihood of the training data. |

Static Protected Member Functions

| Type | Member | Description |
|---|---|---|
| void | declareOptions (OptionList &ol) | Declares this class' options. |

Private Member Functions

| Type | Member | Description |
|---|---|---|
| void | build_ () | Does the actual building. |

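Let $D$ denote the training set, $k(x) = (K(x, x_i))_i$ the vector of kernel values between $x$ and the training inputs, $t_j$ the $j$-th column of the training targets, and $\sigma_j^2$ the noise variance of output $j$. The prediction is the posterior mean:

$$\text{prediction} = E\big[E[y \mid x] \mid D\big] = E[y \mid x, D], \qquad \text{prediction}_j = \sum_i \alpha_{ji} K(x, x_i) = k(x)^\top (K + \sigma_j^2 I)^{-1} t_j.$$

The predictive variance of output $j$ decomposes as

$$\operatorname{Var}[y_j \mid x, D] = \operatorname{Var}\big[E[y_j \mid x] \mid D\big] + E\big[\operatorname{Var}[y_j \mid x] \mid D\big],$$

where

$$\operatorname{Var}\big[E[y_j \mid x] \mid D\big] = K(x, x) - k(x)^\top (K + \sigma_j^2 I)^{-1} k(x), \qquad E\big[\operatorname{Var}[y_j \mid x] \mid D\big] = \operatorname{Var}[y_j \mid x] = \sigma_j^2 \ \text{(the noise)}.$$

Costs:

$$\text{MSE} = \sum_j (y_j - \text{prediction}_j)^2, \qquad \text{NLL} = -\sum_j \log \mathcal{N}\big(y_j;\ \text{prediction}_j,\ \operatorname{Var}[y_j \mid x, D]\big).$$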

Definition at line 72 of file GaussianProcessRegressor.h.
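The prediction and variance formulas above can be illustrated with a small, self-contained C++ sketch for a single output. It is not PLearn's implementation: it assumes 1-D inputs, a hypothetical Gaussian RBF kernel `rbf` with unit length scale, and a Cholesky solve in place of the eigendecomposition (eigenvectors, eigenvalues) used by this class. The names `GPSketch`, `cholesky` and `cholSolve` are illustrative only.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

using Vec = std::vector<double>;
using Mat = std::vector<Vec>;

// Hypothetical kernel: Gaussian RBF with unit length scale, 1-D inputs.
double rbf(double a, double b) { return std::exp(-0.5 * (a - b) * (a - b)); }

// In-place Cholesky factorization A = L L^T (A symmetric positive definite).
// The lower triangle of A is overwritten with L; the strict upper triangle
// keeps its original entries and is never read afterwards.
void cholesky(Mat& A) {
    const std::size_t n = A.size();
    for (std::size_t j = 0; j < n; ++j) {
        double d = A[j][j];
        for (std::size_t k = 0; k < j; ++k) d -= A[j][k] * A[j][k];
        A[j][j] = std::sqrt(d);
        for (std::size_t i = j + 1; i < n; ++i) {
            double s = A[i][j];
            for (std::size_t k = 0; k < j; ++k) s -= A[i][k] * A[j][k];
            A[i][j] = s / A[j][j];
        }
    }
}

// Solves (L L^T) x = b given the lower-triangular Cholesky factor L.
Vec cholSolve(const Mat& L, Vec b) {
    const std::size_t n = L.size();
    for (std::size_t i = 0; i < n; ++i) {          // forward: L y = b
        for (std::size_t k = 0; k < i; ++k) b[i] -= L[i][k] * b[k];
        b[i] /= L[i][i];
    }
    for (std::size_t i = n; i-- > 0;) {            // backward: L^T x = y
        for (std::size_t k = i + 1; k < n; ++k) b[i] -= L[k][i] * b[k];
        b[i] /= L[i][i];
    }
    return b;
}

struct GPSketch {
    Vec xs;         // training inputs x_i
    Mat L;          // Cholesky factor of K + sigma2 I
    Vec alpha;      // (K + sigma2 I)^{-1} t
    double sigma2;  // noise variance

    GPSketch(const Vec& x, const Vec& t, double noise_var) : xs(x), sigma2(noise_var) {
        const std::size_t n = x.size();
        L.assign(n, Vec(n, 0.0));
        for (std::size_t i = 0; i < n; ++i)
            for (std::size_t j = 0; j < n; ++j)
                L[i][j] = rbf(x[i], x[j]) + (i == j ? sigma2 : 0.0);
        cholesky(L);
        alpha = cholSolve(L, t);
    }

    // k(x) = (K(x, x_i))_i
    Vec kvec(double x) const {
        Vec k(xs.size());
        for (std::size_t i = 0; i < xs.size(); ++i) k[i] = rbf(x, xs[i]);
        return k;
    }

    // prediction = sum_i alpha_i K(x, x_i)
    double mean(double x) const {
        const Vec k = kvec(x);
        double m = 0.0;
        for (std::size_t i = 0; i < k.size(); ++i) m += alpha[i] * k[i];
        return m;
    }

    // K(x,x) - k(x)^T (K + sigma2 I)^{-1} k(x) + sigma2
    double variance(double x) const {
        const Vec k = kvec(x);
        const Vec v = cholSolve(L, k);
        double q = 0.0;
        for (std::size_t i = 0; i < k.size(); ++i) q += k[i] * v[i];
        return rbf(x, x) - q + sigma2;
    }
};
```

For one training point x = 0 with target 1 and noise variance 1, K + σ²I = [2] and α = 0.5, so at x = 0 the sketch gives a mean of 0.5 and a variance of 1 - 1/2 + 1 = 1.5. A Cholesky solve was chosen here only because it is short to write; it does not reproduce the eigenvector truncation controlled by max_nb_evectors.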

typedef PConditionalDistribution inherited

Reimplemented from PLearn::PConditionalDistribution. Definition at line 76 of file GaussianProcessRegressor.h. Referenced by GaussianProcessRegressor().

GaussianProcessRegressor ()

Definition at line 49 of file GaussianProcessRegressor.cc. References inherited.

virtual ~GaussianProcessRegressor ()

Definition at line 180 of file GaussianProcessRegressor.cc.

real BayesianCost ()

Used for hyper-parameter selection: this is the negative log-likelihood of the training data, i.e. the "training cost"

$$\text{cost} = \frac{1}{2} \sum_j \left( \log\det(K + \sigma_j^2 I) + t_j^\top (K + \sigma_j^2 I)^{-1} t_j + \ell \log(2\pi) \right),$$

where $t_j$ is the $j$-th column of the training targets and $\ell$ is the number of training examples.

Definition at line 423 of file GaussianProcessRegressor.cc. References eigenvalues, eigenvectors, K, PLearn::TMat< T >::length(), Log2Pi, n_outputs, noise_sd, and PLearn::safeflog().
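Because K is symmetric, with eigendecomposition $K = \sum_k \lambda_k e_k e_k^\top$, both data-dependent terms of this cost reduce to sums over eigenpairs: $\log\det(K + \sigma_j^2 I) = \sum_k \log(\lambda_k + \sigma_j^2)$ and $t_j^\top (K + \sigma_j^2 I)^{-1} t_j = \sum_k (e_k \cdot t_j)^2 / (\lambda_k + \sigma_j^2)$. The sketch below assumes all $\ell$ eigenpairs are available; if fewer are kept (see max_nb_evectors) the formula would only be approximate. The function name `gpNegLogLikelihood` and its argument layout are hypothetical.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical sketch of the negative log-likelihood computed by BayesianCost().
// evals[k], evecs[k]: k-th eigenvalue and orthonormal eigenvector of K
// (all l eigenpairs assumed present).
// targets[j]: target column t_j; noise_var[j]: sigma_j^2.
double gpNegLogLikelihood(const std::vector<double>& evals,
                          const std::vector<std::vector<double>>& evecs,
                          const std::vector<std::vector<double>>& targets,
                          const std::vector<double>& noise_var) {
    const double kLog2Pi = std::log(2.0 * std::acos(-1.0));
    const std::size_t l = evals.size();          // number of training examples
    double cost = 0.0;
    for (std::size_t j = 0; j < targets.size(); ++j) {
        double logdet = 0.0, quad = 0.0;
        for (std::size_t k = 0; k < l; ++k) {
            const double shifted = evals[k] + noise_var[j];  // eigenvalue of K + sigma_j^2 I
            double proj = 0.0;                               // e_k . t_j
            for (std::size_t i = 0; i < l; ++i) proj += evecs[k][i] * targets[j][i];
            logdet += std::log(shifted);
            quad += proj * proj / shifted;
        }
        cost += 0.5 * (logdet + quad + static_cast<double>(l) * kLog2Pi);
    }
    return cost;
}
```

With K = diag(1, 3), σ² = 1 and t = (1, 2), the log-determinant is log 8 and the quadratic term is 1/2 + 4/4 = 1.5.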

virtual void build ()

Simply calls inherited::build(), then build_().

Reimplemented from PLearn::PConditionalDistribution. Definition at line 169 of file GaussianProcessRegressor.cc. References build_().

void build_ ()

This does the actual building.

Reimplemented from PLearn::PConditionalDistribution. Definition at line 139 of file GaussianProcessRegressor.cc. References PLearn::abspath(), alpha, PLearn::force_mkdir(), K, Kxxi, PLearn::VMat::length(), meanK, n_outputs, outputsize(), PLERROR, PLearn::TVec< T >::resize(), and PLearn::TMat< T >::resize(). Referenced by build().

virtual void computeCostsFromOutputs (const Vec &input, const Vec &output, const Vec &target, Vec &costs) const

This should be defined in subclasses to compute the weighted costs from an already computed output. NOTE: in exotic cases, the cost may also depend on some information in the input, which is why the method also receives it.

Reimplemented from PLearn::PDistribution. Definition at line 294 of file GaussianProcessRegressor.cc. References expectation(), PLearn::gauss_log_density_var(), n_outputs, noise_sd, PLearn::TVec< T >::subVec(), PLearn::var(), and variance(). Referenced by computeOutputAndCosts().
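The two costs listed in the class description (MSE and NLL) can be computed per example from the predictive means and variances. This is a hedged sketch, not PLearn's code: PLearn uses its own gauss_log_density_var() helper, whose exact signature is not shown here, so the Gaussian log-density is written out directly. The function name `gpCosts` and the (MSE, NLL) ordering of the result are hypothetical.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical sketch: MSE = sum_j (y_j - mean_j)^2 and
// NLL = -sum_j log N(y_j; mean_j, var_j), returned as {MSE, NLL}.
std::vector<double> gpCosts(const std::vector<double>& mean,
                            const std::vector<double>& var,
                            const std::vector<double>& target) {
    const double kTwoPi = 2.0 * std::acos(-1.0);
    double mse = 0.0, nll = 0.0;
    for (std::size_t j = 0; j < target.size(); ++j) {
        const double d = target[j] - mean[j];
        mse += d * d;
        // -log N(y; m, v) = 0.5 * (log(2 pi v) + (y - m)^2 / v)
        nll += 0.5 * (std::log(kTwoPi * var[j]) + d * d / var[j]);
    }
    return {mse, nll};
}
```

For a single output with mean 0, variance 1 and target 1, the sketch returns MSE = 1 and NLL = (log(2π) + 1) / 2.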

virtual void computeCostsOnly (const Vec &input, const Vec &target, Vec &costs) const

By default, calls computeOutputAndCosts. This may be overloaded if there is a more efficient way to compute the costs directly, without computing the whole output vector.

Reimplemented from PLearn::PLearner. Definition at line 336 of file GaussianProcessRegressor.cc. References computeOutputAndCosts(), outputsize(), and PLearn::TVec< T >::resize().

virtual void computeOutput (const Vec &input, Vec &output) const

Produces outputs according to what is specified in outputs_def.

Reimplemented from PLearn::PConditionalDistribution. Definition at line 264 of file GaussianProcessRegressor.cc. References expectation(), n_outputs, setInput_const(), PLearn::TVec< T >::subVec(), and variance(). Referenced by computeOutputAndCosts().

virtual void computeOutputAndCosts (const Vec &input, const Vec &target, Vec &output, Vec &costs) const

By default, calls computeOutput and computeCostsFromOutputs. You may overload this if you have a more efficient way to compute both the output and the weighted costs at the same time.

Reimplemented from PLearn::PLearner. Definition at line 329 of file GaussianProcessRegressor.cc. References computeCostsFromOutputs(), and computeOutput(). Referenced by computeCostsOnly().

static void declareOptions (OptionList &ol)

Declares this class' options.

Reimplemented from PLearn::PConditionalDistribution. Definition at line 110 of file GaussianProcessRegressor.cc. References PLearn::declareOption(), and PLearn::OptionList.

virtual void expectation (Vec expected_y) const

Returns E[X].

Definition at line 221 of file GaussianProcessRegressor.cc. References alpha, PLearn::dot(), Kxxi, and n_outputs.
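Following prediction[j] = Σ_i α_{ji} K(x, x_i) from the class description, the posterior mean for all outputs is one dot product per row of alpha with Kxxi. A minimal sketch, assuming row j of alpha holds $(K + \sigma_j^2 I)^{-1} t_j$ and using std::vector in place of PLearn's Mat/Vec; the name `expectationSketch` is hypothetical. Note that PLearn's Vec shares storage, which is why the real method can take expected_y by value; the sketch uses a reference instead.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical sketch: expected_y[j] = sum_i alpha[j][i] * Kxxi[i].
void expectationSketch(const std::vector<std::vector<double>>& alpha,
                       const std::vector<double>& Kxxi,
                       std::vector<double>& expected_y) {
    expected_y.assign(alpha.size(), 0.0);
    for (std::size_t j = 0; j < alpha.size(); ++j)
        for (std::size_t i = 0; i < Kxxi.size(); ++i)
            expected_y[j] += alpha[j][i] * Kxxi[i];
}
```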

virtual Vec expectation () const

Returns E[X].

Definition at line 228 of file GaussianProcessRegressor.cc. References n_outputs, and PLearn::TVec< T >::resize(). Referenced by computeCostsFromOutputs(), and computeOutput().

virtual void forget ()

(Re-)initializes the PLearner in its fresh state (which may depend on the 'seed' option) and sets 'stage' back to 0, the stage of a fresh learner.

Reimplemented from PLearn::PDistribution. Definition at line 175 of file GaussianProcessRegressor.cc.

int getTestCostIndex (const string &costname) const

Returns the index of the given cost in the vector of test costs (-1 if not found).

Reimplemented from PLearn::PLearner. Definition at line 195 of file GaussianProcessRegressor.cc. References getTestCostNames(), and PLearn::TVec< T >::length().
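The lookup described above amounts to a linear search over the cost names. A minimal sketch, with std::vector<std::string> standing in for PLearn's TVec<string>; the function name `costIndex` and the cost names used in the example ("mse", "nll") are hypothetical.

```cpp
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical sketch: position of costname in names, or -1 if absent.
int costIndex(const std::vector<std::string>& names, const std::string& costname) {
    for (std::size_t i = 0; i < names.size(); ++i)
        if (names[i] == costname) return static_cast<int>(i);
    return -1;
}
```

The same search, applied to getTrainCostNames() instead of getTestCostNames(), covers getTrainCostIndex().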

virtual TVec< string > getTestCostNames () const

This should return the names of the costs computed by computeCostsFromOutputs.

Reimplemented from PLearn::PDistribution. Definition at line 192 of file GaussianProcessRegressor.cc. References getTrainCostNames(). Referenced by getTestCostIndex().

int getTrainCostIndex (const string &costname) const

Returns the index of the given cost in the vector of train costs (objectives) (-1 if not found).

Reimplemented from PLearn::PLearner. Definition at line 204 of file GaussianProcessRegressor.cc. References getTrainCostNames(), and PLearn::TVec< T >::length().

virtual TVec< string > getTrainCostNames () const

This should return the names of the objective costs that the train method computes and for which it updates the VecStatsCollector train_stats.

Reimplemented from PLearn::PDistribution. Definition at line 184 of file GaussianProcessRegressor.cc. Referenced by getTestCostNames(), and getTrainCostIndex().

void inverseCovTimesVec (real sigma, Vec v, Vec Cinv_v) const

Definition at line 456 of file GaussianProcessRegressor.cc. References PLearn::dot(), eigenvalues, eigenvectors, PLearn::TMat< T >::length(), PLearn::multiply(), and PLearn::multiplyAdd(). Referenced by train().
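inverseCovTimesVec() works from eigenvalues and eigenvectors rather than an explicit matrix inverse. One way to do this, sketched below under stated assumptions, approximates K by its top m eigenpairs, so that $(K + sI)^{-1} v = v / s + \sum_k \big(\tfrac{1}{\lambda_k + s} - \tfrac{1}{s}\big)(e_k \cdot v)\, e_k$. Whether PLearn's sigma argument is a standard deviation or a variance is not documented here; the sketch treats s as the variance added to the diagonal. The name `inverseCovTimesVecSketch` is hypothetical.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical sketch: (K + s I)^{-1} v using m orthonormal eigenvectors of K
// (rows of evecs) with eigenvalues evals; directions outside their span are
// treated as eigenvalue 0, i.e. scaled by 1/s.
std::vector<double> inverseCovTimesVecSketch(const std::vector<double>& evals,
                                             const std::vector<std::vector<double>>& evecs,
                                             double s, const std::vector<double>& v) {
    std::vector<double> out(v.size());
    for (std::size_t i = 0; i < v.size(); ++i) out[i] = v[i] / s;
    for (std::size_t k = 0; k < evals.size(); ++k) {
        double proj = 0.0;                                    // e_k . v
        for (std::size_t i = 0; i < v.size(); ++i) proj += evecs[k][i] * v[i];
        const double coef = proj * (1.0 / (evals[k] + s) - 1.0 / s);
        for (std::size_t i = 0; i < v.size(); ++i) out[i] += coef * evecs[k][i];
    }
    return out;
}
```

With K = diag(1, 3), s = 1 and v = (1, 2), the result is (0.5, 0.5); keeping only the first eigenpair gives (0.5, 2).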

virtual double log_density (const Vec &x) const

Returns the log of the probability density, log(p(x)).

Reimplemented from PLearn::PDistribution. Definition at line 214 of file GaussianProcessRegressor.cc. References PLERROR.

virtual void makeDeepCopyFromShallowCopy (map< const void *, void * > &copies)

Transforms a shallow copy into a deep copy.

Reimplemented from PLearn::PConditionalDistribution. Definition at line 56 of file GaussianProcessRegressor.cc. References alpha, PLearn::deepCopyField(), eigenvalues, eigenvectors, K, kernel, Kxx, Kxxi, meanK, and noise_sd.

virtual int nTestCosts () const

Caches getTestCostNames().size() in an internal variable the first time it is called, then returns the content of this variable.

Reimplemented from PLearn::PLearner. Definition at line 172 of file GaussianProcessRegressor.h.

virtual int nTrainCosts () const

Caches getTrainCostNames().size() in an internal variable the first time it is called, then returns the content of this variable.

Reimplemented from PLearn::PLearner. Definition at line 174 of file GaussianProcessRegressor.h.

virtual int outputsize () const

The returned value depends on outputs_def.

Reimplemented from PLearn::PDistribution. Definition at line 158 of file GaussianProcessRegressor.cc. References n_outputs. Referenced by build_(), and computeCostsOnly().

PLEARN_DECLARE_OBJECT (GaussianProcessRegressor)

real QFormInverse (real sigma2, Vec u) const

Definition at line 469 of file GaussianProcessRegressor.cc. References PLearn::dot(), eigenvalues, eigenvectors, PLearn::TMat< T >::length(), and PLearn::norm(). Referenced by variance().
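QFormInverse() evaluates a quadratic form in the inverse covariance, which is the $k(x)^\top (K + \sigma_j^2 I)^{-1} k(x)$ term of the predictive variance. A hedged sketch, assuming K is approximated by its top eigenpairs and that sigma2 is the variance added to the diagonal, as its name suggests: $u^\top (K + sI)^{-1} u = \lVert u \rVert^2 / s + \sum_k \big(\tfrac{1}{\lambda_k + s} - \tfrac{1}{s}\big)(e_k \cdot u)^2$. The name `qformInverseSketch` is hypothetical.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical sketch: u^T (K + s I)^{-1} u from eigenpairs of K
// (orthonormal rows of evecs); directions outside their span count as eigenvalue 0.
double qformInverseSketch(const std::vector<double>& evals,
                          const std::vector<std::vector<double>>& evecs,
                          double s, const std::vector<double>& u) {
    double norm2 = 0.0;                                    // ||u||^2
    for (double x : u) norm2 += x * x;
    double result = norm2 / s;
    for (std::size_t k = 0; k < evals.size(); ++k) {
        double proj = 0.0;                                 // e_k . u
        for (std::size_t i = 0; i < u.size(); ++i) proj += evecs[k][i] * u[i];
        result += proj * proj * (1.0 / (evals[k] + s) - 1.0 / s);
    }
    return result;
}
```

With K = diag(1, 3), s = 1 and u = (1, 2), this gives 1/2 + 4/4 = 1.5.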

virtual void setInput (const Vec &input)

Sets the input part before using the inherited methods.

Definition at line 75 of file GaussianProcessRegressor.cc. References setInput_const().

virtual void setInput_const (const Vec &input) const

Definition at line 79 of file GaussianProcessRegressor.cc. References Gram_matrix_normalization, kernel, Kxx, Kxxi, PLearn::TVec< T >::length(), PLearn::mean(), mean_allK, meanK, and PLearn::sqrt(). Referenced by computeOutput(), and setInput().
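setInput_const() references Gram_matrix_normalization, mean(), meanK and mean_allK, which suggests that the test kernel values can be normalized consistently with the training Gram matrix. The accepted values of Gram_matrix_normalization are not documented here, so the following is only a hypothetical sketch of one common choice, centering in feature space, assuming meanK[i] is the mean of row i of K and mean_allK is the mean of all entries of K. The name `centerTestKernel` is hypothetical.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical sketch of feature-space centering of test kernel values:
//   Kxxi[i] <- K(x,x_i) - mean_m K(x,x_m) - meanK[i] + meanAllK
//   Kxx     <- K(x,x)   - 2 mean_m K(x,x_m) + meanAllK
void centerTestKernel(std::vector<double>& Kxxi, double& Kxx,
                      const std::vector<double>& meanK, double meanAllK) {
    double meanRow = 0.0;                                  // mean_m K(x, x_m)
    for (double k : Kxxi) meanRow += k;
    meanRow /= static_cast<double>(Kxxi.size());
    for (std::size_t i = 0; i < Kxxi.size(); ++i)
        Kxxi[i] = Kxxi[i] - meanRow - meanK[i] + meanAllK;
    Kxx = Kxx - 2.0 * meanRow + meanAllK;
}
```

As a check with a linear kernel k(a, b) = ab, training inputs 1 and 3 (mean 2) and test input 5: K(x, x_i) = (5, 15), meanK = (2, 6), mean_allK = 4 and K(x, x) = 25 become (-3, 3) and 9, which equal (5 - 2)(1 - 2), (5 - 2)(3 - 2) and (5 - 2)^2.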

virtual void train ()

The role of the train method is to bring the learner up to stage == nstages, updating the stats with training costs measured online in the process.

Reimplemented from PLearn::PDistribution. Definition at line 344 of file GaussianProcessRegressor.cc. References alpha, PLearn::columnMean(), eigenvalues, PLearn::eigenVecOfSymmMat(), eigenvectors, Gram_matrix_normalization, PLearn::PLearner::inputsize(), inverseCovTimesVec(), K, kernel, PLearn::TMat< T >::length(), max_nb_evectors, PLearn::mean(), mean_allK, meanK, PLearn::TMat< T >::mod(), n_outputs, noise_sd, PLearn::sqrt(), PLearn::VMat::subMatColumns(), PLearn::PLearner::targetsize(), PLearn::VMat::toMat(), and PLearn::TMat< T >::toVec().

virtual void variance (Vec diag_variances) const

Definition at line 236 of file GaussianProcessRegressor.cc. References Kxx, Kxxi, n_outputs, noise_sd, and QFormInverse().

virtual Mat variance () const

Returns Var[X].

Definition at line 247 of file GaussianProcessRegressor.cc. References Kxx, Kxxi, PLearn::Var::length(), n_outputs, noise_sd, QFormInverse(), and PLearn::var(). Referenced by computeCostsFromOutputs(), and computeOutput().

Mat alpha

Definition at line 96 of file GaussianProcessRegressor.h. Referenced by build_(), expectation(), makeDeepCopyFromShallowCopy(), and train().

Vec eigenvalues

Definition at line 101 of file GaussianProcessRegressor.h. Referenced by BayesianCost(), inverseCovTimesVec(), makeDeepCopyFromShallowCopy(), QFormInverse(), and train().

Mat eigenvectors

Definition at line 100 of file GaussianProcessRegressor.h. Referenced by BayesianCost(), inverseCovTimesVec(), makeDeepCopyFromShallowCopy(), QFormInverse(), and train().

string Gram_matrix_normalization

Definition at line 82 of file GaussianProcessRegressor.h. Referenced by setInput_const(), and train().

Mat K

Definition at line 99 of file GaussianProcessRegressor.h. Referenced by BayesianCost(), build_(), makeDeepCopyFromShallowCopy(), and train().

PP< Kernel > kernel

Definition at line 79 of file GaussianProcessRegressor.h. Referenced by makeDeepCopyFromShallowCopy(), setInput_const(), and train().

real Kxx

Definition at line 98 of file GaussianProcessRegressor.h. Referenced by makeDeepCopyFromShallowCopy(), setInput_const(), and variance().

Vec Kxxi

Definition at line 97 of file GaussianProcessRegressor.h. Referenced by build_(), expectation(), makeDeepCopyFromShallowCopy(), setInput_const(), and variance().

int max_nb_evectors

Definition at line 91 of file GaussianProcessRegressor.h. Referenced by train().

real mean_allK

Definition at line 103 of file GaussianProcessRegressor.h. Referenced by setInput_const(), and train().

Vec meanK

Definition at line 102 of file GaussianProcessRegressor.h. Referenced by build_(), makeDeepCopyFromShallowCopy(), setInput_const(), and train().

int n_outputs

Definition at line 80 of file GaussianProcessRegressor.h. Referenced by BayesianCost(), build_(), computeCostsFromOutputs(), computeOutput(), expectation(), outputsize(), train(), and variance().

Vec noise_sd

Definition at line 81 of file GaussianProcessRegressor.h. Referenced by BayesianCost(), computeCostsFromOutputs(), makeDeepCopyFromShallowCopy(), train(), and variance().

1.3.7