#include <ConjGradientOptimizer.h>

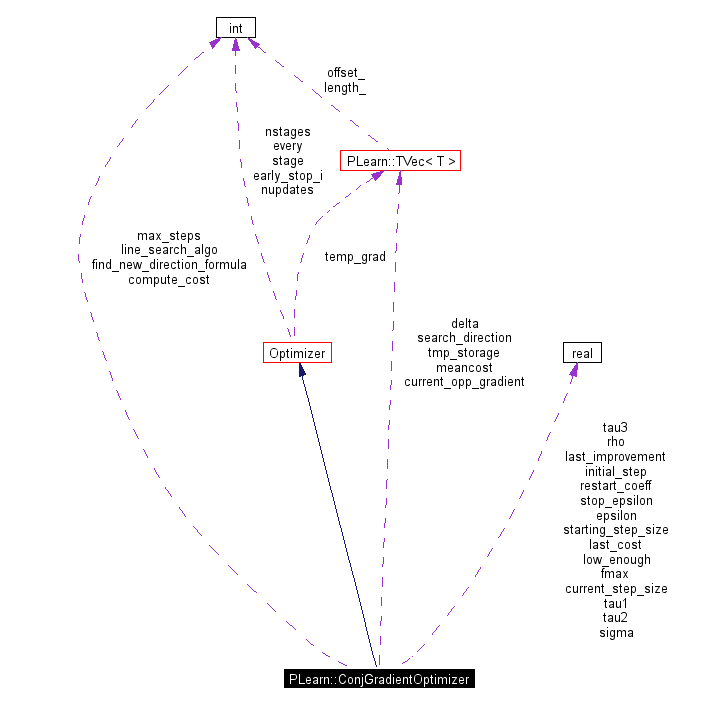

Inheritance diagram for PLearn::ConjGradientOptimizer:

Public Member Functions | |

| ConjGradientOptimizer (real the_starting_step_size=0.01, real the_restart_coeff=0.2, real the_epsilon=0.01, real the_sigma=0.01, real the_rho=0.005, real the_fmax=-1e8, real the_stop_epsilon=0.0001, real the_tau1=9, real the_tau2=0.1, real the_tau3=0.5, int n_updates=1, const string &filename="", int every_iterations=1) | |

| ConjGradientOptimizer (VarArray the_params, Var the_cost, real the_starting_step_size=0.01, real the_restart_coeff=0.2, real the_epsilon=0.01, real the_sigma=0.01, real the_rho=0.005, real the_fmax=0, real the_stop_epsilon=0.0001, real the_tau1=9, real the_tau2=0.1, real the_tau3=0.5, int n_updates=1, const string &filename="", int every_iterations=1) | |

| ConjGradientOptimizer (VarArray the_params, Var the_cost, VarArray the_update_for_measure, real the_starting_step_size=0.01, real the_restart_coeff=0.2, real the_epsilon=0.01, real the_sigma=0.01, real the_rho=0.005, real the_fmax=0, real the_stop_epsilon=0.0001, real the_tau1=9, real the_tau2=0.1, real the_tau3=0.5, int n_updates=1, const string &filename="", int every_iterations=1) | |

| PLEARN_DECLARE_OBJECT (ConjGradientOptimizer) | |

| virtual void | makeDeepCopyFromShallowCopy (CopiesMap &copies) |

| virtual void | build () |

| Should simply call inherited::build(), then this class's build_(). | |

| virtual real | optimize () |

| Subclasses should define this; it is the main method. | |

| virtual bool | optimizeN (VecStatsCollector &stat_coll) |

| Subclasses should define this; it is the new main method. | |

| virtual void | reset () |

Public Attributes | |

| int | compute_cost |

| If set to 1, will compute and display the mean cost at each epoch. | |

| int | line_search_algo |

| The line search algorithm used: 1 = Fletcher line search, 2 = GSearch, 3 = Newton line search. | |

| int | find_new_direction_formula |

| The formula used to find the new search direction: 1 = ConjPOMDP, 2 = Dai-Yuan, 3 = Fletcher-Reeves, 4 = Hestenes-Stiefel, 5 = Polak-Ribiere. | |

| real | starting_step_size |

| real | restart_coeff |

| real | epsilon |

| real | sigma |

| real | rho |

| real | fmax |

| real | stop_epsilon |

| real | tau1 |

| real | tau2 |

| real | tau3 |

| int | max_steps |

| real | initial_step |

| real | low_enough |

Protected Member Functions | |

| virtual void | printStep (ostream &ostr, int step, real mean_cost, string sep="\t") |

Static Protected Member Functions | |

| void | declareOptions (OptionList &ol) |

| Redefine this in subclasses: call declareOption(...) for each option, and then call inherited::declareOptions(options) (see the declareOption function further down). | |

Protected Attributes | |

| Vec | meancost |

Private Types | |

| typedef Optimizer | inherited |

Private Member Functions | |

| void | build_ () |

| bool | findDirection () |

| Find the new search direction for the line search algorithm. | |

| bool | lineSearch () |

| Search for the minimum along the current search direction. Return true iff no improvement was possible (and we can stop here). | |

| void | updateSearchDirection (real gamma) |

| Update the search direction by search_direction = delta + gamma * search_direction. delta is supposed to be the current opposite gradient. Also update current_opp_gradient to be equal to delta. | |

| real | gSearch (void(*grad)(Optimizer *, const Vec &)) |

| real | fletcherSearch (real mu=FLT_MAX) |

| real | newtonSearch (int max_steps, real initial_step, real low_enough) |

Static Private Member Functions | |

| real | conjpomdp (void(*grad)(Optimizer *, const Vec &gradient), ConjGradientOptimizer *opt) |

| real | daiYuan (void(*grad)(Optimizer *, const Vec &), ConjGradientOptimizer *opt) |

| real | fletcherReeves (void(*grad)(Optimizer *, const Vec &), ConjGradientOptimizer *opt) |

| real | hestenesStiefel (void(*grad)(Optimizer *, const Vec &), ConjGradientOptimizer *opt) |

| real | polakRibiere (void(*grad)(Optimizer *, const Vec &), ConjGradientOptimizer *opt) |

| real | computeCostValue (real alpha, ConjGradientOptimizer *opt) |

| real | computeDerivative (real alpha, ConjGradientOptimizer *opt) |

| void | computeCostAndDerivative (real alpha, ConjGradientOptimizer *opt, real &cost, real &derivative) |

| void | cubicInterpol (real f0, real f1, real g0, real g1, real &a, real &b, real &c, real &d) |

| real | daiYuanMain (Vec new_gradient, Vec old_gradient, Vec old_search_direction, Vec tmp_storage) |

| real | findMinWithCubicInterpol (real p1, real p2, real mini, real maxi, real f0, real f1, real g0, real g1) |

| real | findMinWithQuadInterpol (int q, real sum_x, real sum_x_2, real sum_x_3, real sum_x_4, real sum_c_x_2, real sum_g_x, real sum_c_x, real sum_c, real sum_g) |

| real | fletcherSearchMain (real(*f)(real, ConjGradientOptimizer *opt), real(*g)(real, ConjGradientOptimizer *opt), ConjGradientOptimizer *opt, real sigma, real rho, real fmax, real epsilon, real tau1=9, real tau2=0.1, real tau3=0.5, real alpha1=FLT_MAX, real mu=FLT_MAX) |

| real | minCubic (real a, real b, real c, real mini=-FLT_MAX, real maxi=FLT_MAX) |

| real | minQuadratic (real a, real b, real mini=-FLT_MAX, real maxi=FLT_MAX) |

| void | quadraticInterpol (real f0, real f1, real g0, real &a, real &b, real &c) |

Private Attributes | |

| Vec | current_opp_gradient |

| Vec | search_direction |

| Vec | tmp_storage |

| Vec | delta |

| real | last_improvement |

| real | last_cost |

| real | current_step_size |

typedef Optimizer PLearn::ConjGradientOptimizer::inherited [private]

Reimplemented from PLearn::Optimizer. Definition at line 62 of file ConjGradientOptimizer.h. Referenced by ConjGradientOptimizer().

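PLearn::ConjGradientOptimizer::ConjGradientOptimizer (real the_starting_step_size=0.01, real the_restart_coeff=0.2, real the_epsilon=0.01, real the_sigma=0.01, real the_rho=0.005, real the_fmax=-1e8, real the_stop_epsilon=0.0001, real the_tau1=9, real the_tau2=0.1, real the_tau3=0.5, int n_updates=1, const string &filename="", int every_iterations=1)

Definition at line 52 of file ConjGradientOptimizer.cc. References inherited.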

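PLearn::ConjGradientOptimizer::ConjGradientOptimizer (VarArray the_params, Var the_cost, real the_starting_step_size=0.01, real the_restart_coeff=0.2, real the_epsilon=0.01, real the_sigma=0.01, real the_rho=0.005, real the_fmax=0, real the_stop_epsilon=0.0001, real the_tau1=9, real the_tau2=0.1, real the_tau3=0.5, int n_updates=1, const string &filename="", int every_iterations=1)

Definition at line 75 of file ConjGradientOptimizer.cc. References PLearn::endl().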

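PLearn::ConjGradientOptimizer::ConjGradientOptimizer (VarArray the_params, Var the_cost, VarArray the_update_for_measure, real the_starting_step_size=0.01, real the_restart_coeff=0.2, real the_epsilon=0.01, real the_sigma=0.01, real the_rho=0.005, real the_fmax=0, real the_stop_epsilon=0.0001, real the_tau1=9, real the_tau2=0.1, real the_tau3=0.5, int n_updates=1, const string &filename="", int every_iterations=1)

Definition at line 99 of file ConjGradientOptimizer.cc. References PLearn::endl().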

virtual void PLearn::ConjGradientOptimizer::build () [virtual]

Should simply call inherited::build(), then this class's build_(). This method should be callable again at later times, after modifying some option fields to change the "architecture" of the object.

Reimplemented from PLearn::Optimizer. Definition at line 171 of file ConjGradientOptimizer.h. References build_().

void PLearn::ConjGradientOptimizer::build_ () [private]

This method should be redefined in subclasses and do the actual building of the object according to previously set option fields. Constructors can just set option fields, and then call build_. This method is NOT virtual, and will typically be called only from three places: a constructor, the public virtual build() method, and possibly the public virtual read method (which calls its parent's read). build_() can assume that its parent's build_ has already been called.

Reimplemented from PLearn::Optimizer. Definition at line 248 of file ConjGradientOptimizer.cc. References current_opp_gradient, delta, PLearn::Var::length(), meancost, PLearn::VarArray::nelems(), PLearn::TVec< T >::resize(), search_direction, and tmp_storage. Referenced by build().

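void PLearn::ConjGradientOptimizer::computeCostAndDerivative (real alpha, ConjGradientOptimizer *opt, real &cost, real &derivative) [static, private]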

real PLearn::ConjGradientOptimizer::computeCostValue (real alpha, ConjGradientOptimizer *opt) [static, private]

Definition at line 288 of file ConjGradientOptimizer.cc. References PLearn::VarArray::copyFrom(), PLearn::VarArray::copyTo(), PLearn::Optimizer::cost, PLearn::VarArray::fprop(), last_cost, PLearn::Optimizer::params, PLearn::Optimizer::proppath, search_direction, tmp_storage, and PLearn::VarArray::update(). Referenced by fletcherSearch().

real PLearn::ConjGradientOptimizer::computeDerivative (real alpha, ConjGradientOptimizer *opt) [static, private]

Definition at line 305 of file ConjGradientOptimizer.cc. References PLearn::VarArray::copyFrom(), PLearn::VarArray::copyTo(), current_opp_gradient, delta, PLearn::dot(), PLearn::Optimizer::params, search_direction, tmp_storage, and PLearn::VarArray::update(). Referenced by fletcherSearch().

real PLearn::ConjGradientOptimizer::conjpomdp (void(*grad)(Optimizer *, const Vec &gradient), ConjGradientOptimizer *opt) [static, private]

Definition at line 320 of file ConjGradientOptimizer.cc. References current_opp_gradient, delta, PLearn::dot(), PLearn::TVec< T >::length(), PLearn::pownorm(), search_direction, and tmp_storage. Referenced by findDirection().

void PLearn::ConjGradientOptimizer::cubicInterpol (real f0, real f1, real g0, real g1, real &a, real &b, real &c, real &d) [static, private]

Definition at line 344 of file ConjGradientOptimizer.cc. Referenced by findMinWithCubicInterpol().
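The page gives only the signature of cubicInterpol(). As a rough illustration of what a routine with that signature could compute, the sketch below fits a cubic p(x) = a x^3 + b x^2 + c x + d to two function values and two slopes. The interval [0, 1], the coefficient order, and the name `cubicInterpolSketch` are assumptions made for this example, not facts taken from ConjGradientOptimizer.cc.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical sketch (not PLearn's code): cubic Hermite fit on [0, 1].
// Finds a, b, c, d such that p(x) = a*x^3 + b*x^2 + c*x + d satisfies
//   p(0) = f0, p(1) = f1, p'(0) = g0, p'(1) = g1.
void cubicInterpolSketch(double f0, double f1, double g0, double g1,
                         double& a, double& b, double& c, double& d) {
    d = f0;                             // p(0)  = d
    c = g0;                             // p'(0) = c
    a = g0 + g1 - 2.0 * (f1 - f0);      // from p(1) and p'(1)
    b = 3.0 * (f1 - f0) - 2.0 * g0 - g1;
}
```

For instance, sampling p(x) = x^3 - 2x^2 + 3x + 4 at 0 and 1 gives f0 = 4, f1 = 6, g0 = 3, g1 = 2, and the sketch recovers a = 1, b = -2, c = 3, d = 4.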

real PLearn::ConjGradientOptimizer::daiYuan (void(*grad)(Optimizer *, const Vec &), ConjGradientOptimizer *opt) [static, private]

Definition at line 356 of file ConjGradientOptimizer.cc. References current_opp_gradient, delta, PLearn::dot(), PLearn::TVec< T >::length(), PLearn::pownorm(), search_direction, and tmp_storage. Referenced by findDirection().

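real PLearn::ConjGradientOptimizer::daiYuanMain (Vec new_gradient, Vec old_gradient, Vec old_search_direction, Vec tmp_storage) [static, private]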

void PLearn::ConjGradientOptimizer::declareOptions (OptionList &ol) [static, protected]

Redefine this in subclasses: call declareOption(...) for each option, and then call inherited::declareOptions(options) (see the declareOption function further down). Example:

    static void declareOptions(OptionList& ol)
    {
        declareOption(ol, "inputsize", &MyObject::inputsize_, OptionBase::buildoption,
                      "the size of the input\n it must be provided");
        declareOption(ol, "weights", &MyObject::weights, OptionBase::learntoption,
                      "the learnt model weights");
        inherited::declareOptions(ol);
    }

Reimplemented from PLearn::Optimizer. Definition at line 137 of file ConjGradientOptimizer.cc. References PLearn::declareOption(), and PLearn::OptionList.

bool PLearn::ConjGradientOptimizer::findDirection () [private]

Find the new search direction for the line search algorithm.

Definition at line 370 of file ConjGradientOptimizer.cc. References PLearn::abs(), conjpomdp(), current_opp_gradient, daiYuan(), delta, PLearn::dot(), PLearn::endl(), find_new_direction_formula, fletcherReeves(), hestenesStiefel(), polakRibiere(), PLearn::pownorm(), restart_coeff, and updateSearchDirection(). Referenced by optimize(), and optimizeN().
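To make the formulas selected by find_new_direction_formula more concrete, here is a minimal sketch of the textbook coefficients for four of them, written with this page's convention that delta is the new opposite gradient. The `Vec` alias, the helper names, and the function names ending in `Gamma` are assumptions for illustration; the actual PLearn implementations may differ in details such as restarts or safeguards against a zero denominator, and the ConjPOMDP variant is not covered here.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Vec = std::vector<double>;  // stand-in for PLearn's Vec (assumption)

// Dot product of two equally sized vectors.
double dotSketch(const Vec& a, const Vec& b) {
    assert(a.size() == b.size());
    double s = 0.0;
    for (std::size_t i = 0; i < a.size(); ++i) s += a[i] * b[i];
    return s;
}

// Element-wise difference a - b.
Vec subSketch(const Vec& a, const Vec& b) {
    assert(a.size() == b.size());
    Vec r(a.size());
    for (std::size_t i = 0; i < a.size(); ++i) r[i] = a[i] - b[i];
    return r;
}

// Conventions: delta = new opposite gradient (-g_new),
//              prev  = previous opposite gradient (-g_old),
//              dir   = previous search direction.
// None of these guards against a zero denominator.

// Fletcher-Reeves: |g_new|^2 / |g_old|^2
double fletcherReevesGamma(const Vec& delta, const Vec& prev) {
    return dotSketch(delta, delta) / dotSketch(prev, prev);
}

// Polak-Ribiere: g_new . (g_new - g_old) / |g_old|^2
double polakRibiereGamma(const Vec& delta, const Vec& prev) {
    return dotSketch(delta, subSketch(delta, prev)) / dotSketch(prev, prev);
}

// Hestenes-Stiefel: g_new . (g_new - g_old) / (dir . (g_new - g_old))
double hestenesStiefelGamma(const Vec& delta, const Vec& prev, const Vec& dir) {
    Vec y = subSketch(prev, delta);  // equals g_new - g_old
    return dotSketch(delta, subSketch(delta, prev)) / dotSketch(dir, y);
}

// Dai-Yuan: |g_new|^2 / (dir . (g_new - g_old))
double daiYuanGamma(const Vec& delta, const Vec& prev, const Vec& dir) {
    Vec y = subSketch(prev, delta);  // equals g_new - g_old
    return dotSketch(delta, delta) / dotSketch(dir, y);
}
```

The returned coefficient is the gamma that updateSearchDirection() takes as its argument. When the previous line search was exact and the previous direction was the steepest-descent direction, all four formulas give the same value; they differ otherwise.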

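real PLearn::ConjGradientOptimizer::findMinWithCubicInterpol (real p1, real p2, real mini, real maxi, real f0, real f1, real g0, real g1) [static, private]

Definition at line 420 of file ConjGradientOptimizer.cc. References cubicInterpol(), and minCubic(). Referenced by fletcherSearchMain().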

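real PLearn::ConjGradientOptimizer::findMinWithQuadInterpol (int q, real sum_x, real sum_x_2, real sum_x_3, real sum_x_4, real sum_c_x_2, real sum_g_x, real sum_c_x, real sum_c, real sum_g) [static, private]

Definition at line 467 of file ConjGradientOptimizer.cc. Referenced by newtonSearch().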

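real PLearn::ConjGradientOptimizer::fletcherReeves (void(*grad)(Optimizer *, const Vec &), ConjGradientOptimizer *opt) [static, private]

Definition at line 507 of file ConjGradientOptimizer.cc. References current_opp_gradient, delta, and PLearn::pownorm(). Referenced by findDirection().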

real PLearn::ConjGradientOptimizer::fletcherSearch (real mu=FLT_MAX) [private]

Definition at line 518 of file ConjGradientOptimizer.cc. References computeCostValue(), computeDerivative(), current_step_size, fletcherSearchMain(), fmax, rho, sigma, stop_epsilon, tau1, tau2, and tau3. Referenced by lineSearch().

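real PLearn::ConjGradientOptimizer::fletcherSearchMain (real(*f)(real, ConjGradientOptimizer *opt), real(*g)(real, ConjGradientOptimizer *opt), ConjGradientOptimizer *opt, real sigma, real rho, real fmax, real epsilon, real tau1=9, real tau2=0.1, real tau3=0.5, real alpha1=FLT_MAX, real mu=FLT_MAX) [static, private]

Definition at line 538 of file ConjGradientOptimizer.cc. References PLearn::abs(), PLearn::Optimizer::cost, PLearn::Optimizer::early_stop, PLearn::endl(), findMinWithCubicInterpol(), and PLearn::min(). Referenced by fletcherSearch().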

real PLearn::ConjGradientOptimizer::gSearch (void(*grad)(Optimizer *, const Vec &)) [private]

Definition at line 676 of file ConjGradientOptimizer.cc. References PLearn::VarArray::copyFrom(), PLearn::VarArray::copyTo(), current_step_size, delta, PLearn::dot(), epsilon, search_direction, tmp_storage, and PLearn::VarArray::update(). Referenced by lineSearch().

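real PLearn::ConjGradientOptimizer::hestenesStiefel (void(*grad)(Optimizer *, const Vec &), ConjGradientOptimizer *opt) [static, private]

Definition at line 727 of file ConjGradientOptimizer.cc. References current_opp_gradient, delta, PLearn::dot(), PLearn::TVec< T >::length(), search_direction, and tmp_storage. Referenced by findDirection().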

bool PLearn::ConjGradientOptimizer::lineSearch () [private]

Search for the minimum along the current search direction. Return true iff no improvement was possible (and we can stop here).

Definition at line 743 of file ConjGradientOptimizer.cc. References PLearn::endl(), fletcherSearch(), gSearch(), initial_step, line_search_algo, low_enough, max_steps, newtonSearch(), search_direction, and PLearn::VarArray::update(). Referenced by optimize(), and optimizeN().

virtual void PLearn::ConjGradientOptimizer::makeDeepCopyFromShallowCopy (CopiesMap &copies) [virtual]

Performs the operations needed to transform a shallow copy (this) into a deep copy, by deep-copying all the members that need it. Typical implementation:

    void CLASS_OF_THIS::makeDeepCopyFromShallowCopy(CopiesMap& copies)
    {
        SUPERCLASS_OF_THIS::makeDeepCopyFromShallowCopy(copies);
        member_ptr = member_ptr->deepCopy(copies);
        member_smartptr = member_smartptr->deepCopy(copies);
        member_mat.makeDeepCopyFromShallowCopy(copies);
        member_vec.makeDeepCopyFromShallowCopy(copies);
        ...
    }

Reimplemented from PLearn::Object. Definition at line 169 of file ConjGradientOptimizer.h. References PLearn::CopiesMap, and makeDeepCopyFromShallowCopy(). Referenced by makeDeepCopyFromShallowCopy().

real PLearn::ConjGradientOptimizer::minCubic (real a, real b, real c, real mini=-FLT_MAX, real maxi=FLT_MAX) [static, private]

Definition at line 771 of file ConjGradientOptimizer.cc. References PLearn::abs(), minQuadratic(), and PLearn::sqrt(). Referenced by findMinWithCubicInterpol().

real PLearn::ConjGradientOptimizer::minQuadratic (real a, real b, real mini=-FLT_MAX, real maxi=FLT_MAX) [static, private]

Definition at line 829 of file ConjGradientOptimizer.cc. References PLearn::abs(). Referenced by minCubic().
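The signature of minQuadratic() suggests minimizing a quadratic a x^2 + b x over an interval [mini, maxi]. The sketch below shows one way such a routine could work. It is an assumption that the function returns the minimizing x rather than the minimum value, and the name `minQuadraticSketch` is hypothetical; unlike the declared defaults of -FLT_MAX and FLT_MAX, this version expects finite bounds.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical sketch (not PLearn's code): argmin of q(x) = a*x^2 + b*x
// over the closed interval [mini, maxi], with finite bounds.
double minQuadraticSketch(double a, double b, double mini, double maxi) {
    assert(mini <= maxi);
    auto q = [a, b](double x) { return a * x * x + b * x; };
    if (a > 0.0) {
        // Convex case: the unconstrained minimum is the vertex -b / (2a);
        // clamp it into the interval.
        double vertex = -b / (2.0 * a);
        return std::min(std::max(vertex, mini), maxi);
    }
    // Concave or linear case: the minimum lies at one of the endpoints.
    return q(mini) <= q(maxi) ? mini : maxi;
}
```

With a = 1 and b = -2 the vertex is x = 1, so the result is 1 on [-10, 10] and 2 on [2, 5], where the vertex falls outside the interval.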

real PLearn::ConjGradientOptimizer::newtonSearch (int max_steps, real initial_step, real low_enough) [private]

Definition at line 860 of file ConjGradientOptimizer.cc. References PLearn::abs(), computeCostAndDerivative(), PLearn::endl(), findMinWithQuadInterpol(), and x. Referenced by lineSearch().

virtual real PLearn::ConjGradientOptimizer::optimize () [virtual]

Subclasses should define this; it is the main method.

Implements PLearn::Optimizer. Definition at line 911 of file ConjGradientOptimizer.cc. References PLearn::TVec< T >::clear(), compute_cost, current_opp_gradient, current_step_size, PLearn::dot(), PLearn::endl(), findDirection(), last_cost, last_improvement, lineSearch(), PLearn::max(), meancost, PLearn::min(), printStep(), search_direction, and stop_epsilon.

virtual bool PLearn::ConjGradientOptimizer::optimizeN (VecStatsCollector &stat_coll) [virtual]

Subclasses should define this; it is the new main method.

Implements PLearn::Optimizer. Definition at line 996 of file ConjGradientOptimizer.cc. References PLearn::TVec< T >::clear(), compute_cost, current_opp_gradient, current_step_size, delta, PLearn::dot(), PLearn::endl(), findDirection(), last_cost, last_improvement, lineSearch(), PLearn::max(), meancost, PLearn::min(), PLERROR, printStep(), search_direction, stop_epsilon, and PLearn::VecStatsCollector::update().

PLearn::ConjGradientOptimizer::PLEARN_DECLARE_OBJECT (ConjGradientOptimizer)

real PLearn::ConjGradientOptimizer::polakRibiere (void(*grad)(Optimizer *, const Vec &), ConjGradientOptimizer *opt) [static, private]

Definition at line 1055 of file ConjGradientOptimizer.cc. References current_opp_gradient, delta, PLearn::dot(), PLearn::TVec< T >::length(), PLearn::pownorm(), and tmp_storage. Referenced by findDirection().

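virtual void PLearn::ConjGradientOptimizer::printStep (ostream &ostr, int step, real mean_cost, string sep="\t") [protected, virtual]

Definition at line 191 of file ConjGradientOptimizer.h. References PLearn::endl(), meancost, and printStep(). Referenced by optimize(), optimizeN(), and printStep().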

void PLearn::ConjGradientOptimizer::quadraticInterpol (real f0, real f1, real g0, real &a, real &b, real &c) [static, private]

Definition at line 1071 of file ConjGradientOptimizer.cc.

virtual void PLearn::ConjGradientOptimizer::reset () [virtual]

Reimplemented from PLearn::Optimizer. Definition at line 1082 of file ConjGradientOptimizer.cc. References current_step_size, last_improvement, and starting_step_size.

void PLearn::ConjGradientOptimizer::updateSearchDirection (real gamma) [private]

Update the search direction by search_direction = delta + gamma * search_direction. delta is supposed to be the current opposite gradient. Also update current_opp_gradient to be equal to delta.

Definition at line 1092 of file ConjGradientOptimizer.cc. References current_opp_gradient, delta, PLearn::TVec< T >::length(), and search_direction. Referenced by findDirection().
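The update rule described for updateSearchDirection() can be sketched in a few lines. The version below works on plain `std::vector<double>` and a small hypothetical `DirectionState` struct rather than on the class's private members, so it illustrates the rule and is not the PLearn implementation.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Vec = std::vector<double>;  // stand-in for PLearn's Vec (assumption)

// Hypothetical holder for the two members the update touches.
struct DirectionState {
    Vec search_direction;
    Vec current_opp_gradient;
};

// search_direction = delta + gamma * search_direction, element-wise,
// then remember delta as the current opposite gradient.
void updateSearchDirectionSketch(DirectionState& s, const Vec& delta, double gamma) {
    assert(s.search_direction.size() == delta.size());
    for (std::size_t i = 0; i < delta.size(); ++i) {
        s.search_direction[i] = delta[i] + gamma * s.search_direction[i];
    }
    s.current_opp_gradient = delta;
}
```

Setting gamma to 0 discards the previous direction and restarts from steepest descent along delta.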

int PLearn::ConjGradientOptimizer::compute_cost

If set to 1, will compute and display the mean cost at each epoch.

Definition at line 69 of file ConjGradientOptimizer.h. Referenced by optimize(), and optimizeN().

Vec PLearn::ConjGradientOptimizer::current_opp_gradient [private]

Definition at line 109 of file ConjGradientOptimizer.h. Referenced by build_(), computeCostAndDerivative(), computeDerivative(), conjpomdp(), daiYuan(), findDirection(), fletcherReeves(), hestenesStiefel(), optimize(), optimizeN(), polakRibiere(), and updateSearchDirection().

real PLearn::ConjGradientOptimizer::current_step_size [private]

Definition at line 115 of file ConjGradientOptimizer.h. Referenced by fletcherSearch(), gSearch(), optimize(), optimizeN(), and reset().

Vec PLearn::ConjGradientOptimizer::delta [private]

Definition at line 112 of file ConjGradientOptimizer.h. Referenced by build_(), computeCostAndDerivative(), computeDerivative(), conjpomdp(), daiYuan(), findDirection(), fletcherReeves(), gSearch(), hestenesStiefel(), optimizeN(), polakRibiere(), and updateSearchDirection().

real PLearn::ConjGradientOptimizer::epsilon

Definition at line 88 of file ConjGradientOptimizer.h. Referenced by gSearch().

int PLearn::ConjGradientOptimizer::find_new_direction_formula

The formula used to find the new search direction: 1 = ConjPOMDP, 2 = Dai-Yuan, 3 = Fletcher-Reeves, 4 = Hestenes-Stiefel, 5 = Polak-Ribiere.

Definition at line 82 of file ConjGradientOptimizer.h. Referenced by findDirection().

real PLearn::ConjGradientOptimizer::fmax

Definition at line 93 of file ConjGradientOptimizer.h. Referenced by fletcherSearch().

real PLearn::ConjGradientOptimizer::initial_step

Definition at line 99 of file ConjGradientOptimizer.h. Referenced by lineSearch().

real PLearn::ConjGradientOptimizer::last_cost [private]

Definition at line 114 of file ConjGradientOptimizer.h. Referenced by computeCostAndDerivative(), computeCostValue(), optimize(), and optimizeN().

real PLearn::ConjGradientOptimizer::last_improvement [private]

Definition at line 113 of file ConjGradientOptimizer.h. Referenced by optimize(), optimizeN(), and reset().

int PLearn::ConjGradientOptimizer::line_search_algo

The line search algorithm used: 1 = Fletcher line search, 2 = GSearch, 3 = Newton line search.

Definition at line 75 of file ConjGradientOptimizer.h. Referenced by lineSearch().

real PLearn::ConjGradientOptimizer::low_enough

Definition at line 100 of file ConjGradientOptimizer.h. Referenced by lineSearch().

int PLearn::ConjGradientOptimizer::max_steps

Definition at line 98 of file ConjGradientOptimizer.h. Referenced by lineSearch().

Vec PLearn::ConjGradientOptimizer::meancost [protected]

Definition at line 104 of file ConjGradientOptimizer.h. Referenced by build_(), optimize(), optimizeN(), and printStep().

real PLearn::ConjGradientOptimizer::restart_coeff

Definition at line 84 of file ConjGradientOptimizer.h. Referenced by findDirection().

real PLearn::ConjGradientOptimizer::rho

Definition at line 92 of file ConjGradientOptimizer.h. Referenced by fletcherSearch().

Vec PLearn::ConjGradientOptimizer::search_direction [private]

Definition at line 110 of file ConjGradientOptimizer.h. Referenced by build_(), computeCostAndDerivative(), computeCostValue(), computeDerivative(), conjpomdp(), daiYuan(), gSearch(), hestenesStiefel(), lineSearch(), optimize(), optimizeN(), and updateSearchDirection().

real PLearn::ConjGradientOptimizer::sigma

Definition at line 91 of file ConjGradientOptimizer.h. Referenced by fletcherSearch().

real PLearn::ConjGradientOptimizer::starting_step_size

Definition at line 83 of file ConjGradientOptimizer.h. Referenced by reset().

real PLearn::ConjGradientOptimizer::stop_epsilon

Definition at line 94 of file ConjGradientOptimizer.h. Referenced by fletcherSearch(), optimize(), and optimizeN().

real PLearn::ConjGradientOptimizer::tau1

Definition at line 95 of file ConjGradientOptimizer.h. Referenced by fletcherSearch().

real PLearn::ConjGradientOptimizer::tau2

Definition at line 95 of file ConjGradientOptimizer.h. Referenced by fletcherSearch().

real PLearn::ConjGradientOptimizer::tau3

Definition at line 95 of file ConjGradientOptimizer.h. Referenced by fletcherSearch().

Vec PLearn::ConjGradientOptimizer::tmp_storage [private]

Definition at line 111 of file ConjGradientOptimizer.h. Referenced by build_(), computeCostAndDerivative(), computeCostValue(), computeDerivative(), conjpomdp(), daiYuan(), gSearch(), hestenesStiefel(), and polakRibiere().
