#include <Learner.h>

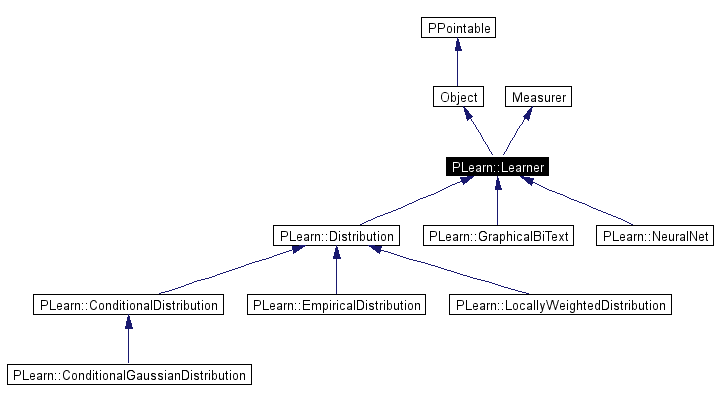

Inheritance diagram for PLearn::Learner:

Public Types

| Type | Name | Description |
|---|---|---|
| typedef Object | inherited | |

Public Member Functions

| Type | Name | Description |
|---|---|---|
| string | basename () const | Returns expdir+train_set->getAlias() (if train_set is indeed defined and has an alias...). |
| | Learner (int the_inputsize=0, int the_targetsize=0, int the_outputsize=0) | |
| virtual | ~Learner () | |
| virtual void | setExperimentDirectory (const string &the_expdir) | The experiment directory is the directory in which files related to this model are to be saved. |
| string | getExperimentDirectory () const | |
| | PLEARN_DECLARE_ABSTRACT_OBJECT (Learner) | Does the necessary operations to transform a shallow copy (this) into a deep copy by deep-copying all the members that need to be. |
| virtual void | makeDeepCopyFromShallowCopy (CopiesMap &copies) | |
| virtual void | build () | **** SUBCLASS WRITING: **** This method should be redefined in subclasses, to just call inherited::build() and then build_(). |
| virtual void | setTrainingSet (VMat training_set) | Declares the train_set. |
| VMat | getTrainingSet () | |
| virtual void | train (VMat training_set)=0 | |
| virtual void | newtrain (VecStatsCollector &train_stats) | |
| virtual void | newtest (VMat testset, VecStatsCollector &test_stats, VMat testoutputs=0, VMat testcosts=0) | Should perform the test on testset, updating the test cost statistics, and optionally filling testoutputs and testcosts. |
| virtual void | train (VMat training_set, VMat accept_prob, real max_accept_prob=1.0, VMat weights=VMat()) | |
| virtual void | use (const Vec &input, Vec &output)=0 | |
| virtual void | use (const Mat &inputs, Mat outputs) | |
| virtual void | computeOutput (const VVec &input, Vec &output) | *** SUBCLASS WRITING: *** This should be overloaded in subclasses to compute the output from the input. |
| virtual void | computeCostsFromOutputs (const VVec &input, const Vec &output, const VVec &target, const VVec &weight, Vec &costs) | *** SUBCLASS WRITING: *** This should be overloaded in subclasses to compute the weighted costs from an already computed output. |
| virtual void | computeOutputAndCosts (const VVec &input, VVec &target, const VVec &weight, Vec &output, Vec &costs) | The default version calls computeOutput and computeCostsFromOutputs. You may overload this if you have a more efficient way to compute both the output and the weighted costs at the same time. |
| virtual void | computeCosts (const VVec &input, VVec &target, VVec &weight, Vec &costs) | The default version calls computeOutputAndCosts. This may be overloaded if there is a more efficient way to compute the costs directly, without computing the whole output vector. |
| virtual void | setModel (const Vec &new_options) | |
| virtual void | forget () | |
| virtual bool | measure (int step, const Vec &costs) | |
| virtual void | oldwrite (ostream &out) const | |
| virtual void | oldread (istream &in) | DEPRECATED. For backward compatibility with old saved objects. |
| void | save (const string &filename="") const | DEPRECATED. Call PLearn::save(filename, object) instead. |
| void | load (const string &filename="") | DEPRECATED. Call PLearn::load(filename, object) instead. |
| virtual void | stop_if_wanted () | Stopping condition: by default, triggered when a file named experiment_name + "_stop" is found to exist. |
| int | inputsize () const | Simple accessor methods (do NOT overload! Set inputsize_ and outputsize_ instead). |
| int | targetsize () const | |
| int | outputsize () const | |
| int | weightsize () const | |
| int | epoch () const | |
| virtual int | costsize () const | **** SUBCLASS WRITING: **** should be redefined if the user redefines computeCost; the default version returns |
| void | setTestCostFunctions (Array< CostFunc > costfunctions) | Call this method to define which cost functions are computed by default (these are generic cost functions which compare the output with the target). |
| void | setTestStatistics (StatsItArray statistics) | This method defines which statistics are computed on the costs (these compute a vector of statistics that depend on all the test costs). |
| virtual void | setTestDuringTrain (ostream &testout, int every, Array< VMat > testsets) | testout: the stream where the test results are to be written; every: how often (in number of iterations) the tests should be performed. |
| virtual void | setTestDuringTrain (Array< VMat > testsets) | |
| const Array< VMat > & | getTestDuringTrain () const | Returns the test sets that are used during training. |
| void | setEarlyStopping (int which_testset, int which_testresult, real max_degradation, real min_value=-FLT_MAX, real min_improvement=0, bool relative_changes=true, bool save_best=true, int max_degraded_steps=-1) | |
| virtual void | computeCost (const Vec &input, const Vec &target, const Vec &output, const Vec &cost) | Computes the cost vector, given input, target and output. The default version applies the declared CostFuncs to the (output, target) pair, putting the cost computed by each CostFunc in one element of the cost vector. |
| virtual void | useAndCost (const Vec &input, const Vec &target, Vec output, Vec cost) | By default, this function calls use(input, output) and then computeCost(input, target, output, cost), so you can overload computeCost to change the cost computation. |
| virtual void | useAndCostOnTestVec (const VMat &test_set, int i, const Vec &output, const Vec &cost) | The default version calls useAndCost on test_set(i), so you don't need to overload this method unless you want to provide a more efficient implementation (for ex. |
| virtual void | apply (const VMat &data, VMat outputs) | |
| virtual void | applyAndComputeCosts (const VMat &data, VMat outputs, VMat costs) | |
| virtual void | applyAndComputeCostsOnTestMat (const VMat &test_set, int i, const Mat &output_block, const Mat &cost_block) | Like useAndCostOnTestVec, but on a block (of length minibatch_size) of rows from the test set: applies the learner and computes outputs and costs for the block of test_set rows starting at i. |
| virtual void | computeCosts (const VMat &data, VMat costs) | |
| virtual void | computeLeaveOneOutCosts (const VMat &data, VMat costs) | For each data point i, trains with the dataset removeRow(data, i), calls useAndCost on point i, and puts the results in the costs VMat. |
| virtual void | computeLeaveOneOutCosts (const VMat &data, VMat costsmat, CostFunc costf) | |
| Vec | computeTestStatistics (const VMat &costs) | |
| virtual Vec | test (VMat test_set, const string &save_test_outputs="", const string &save_test_costs="") | This function should work with and without MPI. |
| virtual Array< string > | costNames () const | |
| virtual Array< string > | testResultsNames () const | |
| virtual Array< string > | trainObjectiveNames () const | Returns an array of strings corresponding to the names of the fields that will be written to objectiveout (by default this calls testResultsNames()). |
| void | appendMeasurer (Measurer &measurer) | |
| Vec | getTrainCost () | |

Static Public Member Functions

| Type | Name | Description |
|---|---|---|
| PStream & | default_vlog () | The default stream to which lout is set upon construction of all Learners (defaults to cout). |

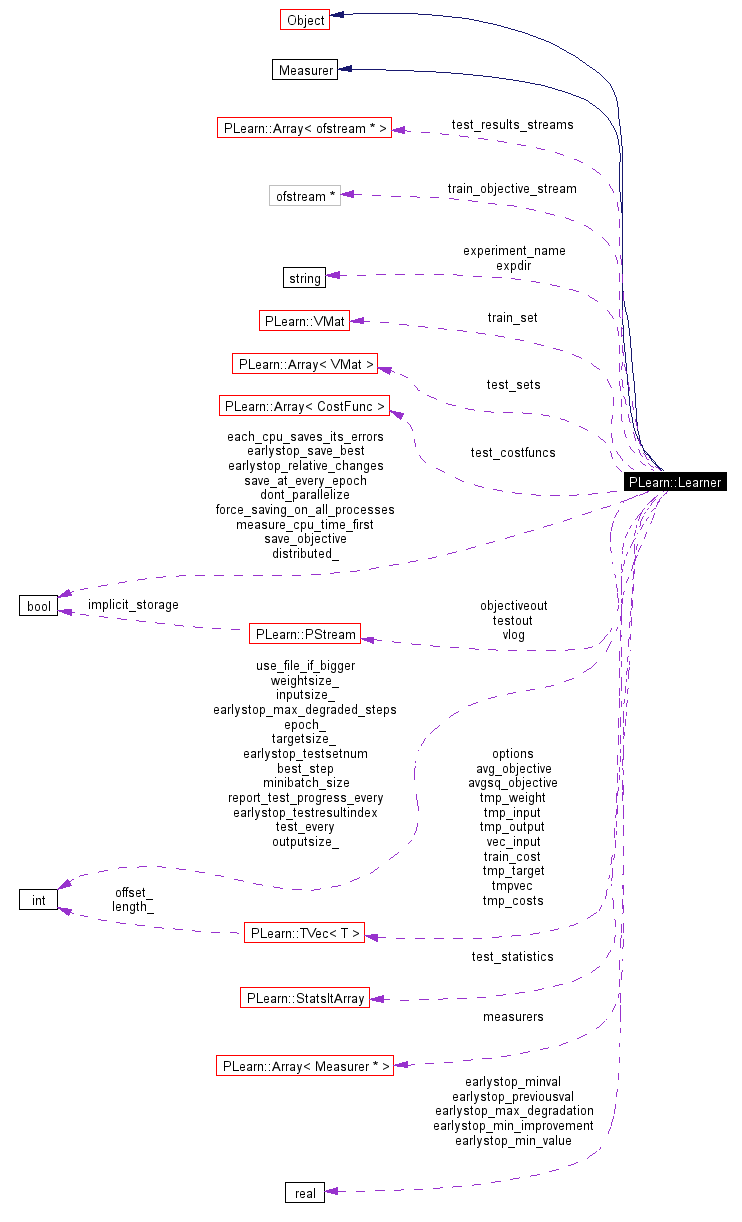

Public Attributes

| Type | Name | Description |
|---|---|---|
| int | inputsize_ | The data VMats are assumed to be formed of inputsize() columns followed by targetsize() columns. |
| int | targetsize_ | |
| int | outputsize_ | The use() method produces an output vector of size outputsize(). |
| int | weightsize_ | |
| bool | dont_parallelize | By default, MPI parallelization done at a given level prevents further parallelization at lower levels. |
| PStream | testout | Test-during-train specifications. |
| int | test_every | |
| Vec | avg_objective | Average of the objective function(s) over the last test_every steps. |
| Vec | avgsq_objective | Average of the squared objective function(s) over the last test_every steps. |
| VMat | train_set | The current set being used for training. |
| Array< VMat > | test_sets | Test sets to test on during training. |
| int | minibatch_size | Test by blocks of this size, using apply rather than use. |
| int | report_test_progress_every | |
| Vec | options | DEPRECATED. Options in the construction of the model through setModel. |
| int | earlystop_testsetnum | Index of the test set (in test_sets) to use for early stopping. |
| int | earlystop_testresultindex | Index of the statistic (as returned by test) to use. |
| real | earlystop_max_degradation | Maximum degradation in error from the last best value. |
| real | earlystop_min_value | Minimum error beyond which we stop. |
| real | earlystop_min_improvement | Minimum improvement in error; otherwise we stop. |
| bool | earlystop_relative_changes | Are max_degradation and min_improvement relative? |
| bool | earlystop_save_best | If yes, then return with the saved "best" model. |
| int | earlystop_max_degraded_steps | Maximum number of steps beyond the best one found [in version >= 1]. |
| bool | save_at_every_epoch | Save the learner at each epoch? |
| bool | save_objective | |
| int | best_step | The step (usually epoch) at which the validation cost was best. |
| real | earlystop_minval | |
| string | experiment_name | |
| Array< CostFunc > | test_costfuncs | |
| StatsItArray | test_statistics | |
| PStream | vlog | The log stream to which all the verbose output from this learner should be sent. |
| PStream | objectiveout | The log stream to use to record the objective function during training. |
| Vec | vec_input | **Next generation** learners allow inputs to be anything, not just Vec. |

Static Public Attributes

| Type | Name | Description |
|---|---|---|
| int | use_file_if_bigger = 64000000L | number of elements above which a file VMatrix rather |
| bool | force_saving_on_all_processes = false | Otherwise, in MPI, only CPU0 actually saves. |

Protected Member Functions

| Type | Name | Description |
|---|---|---|
| void | openTrainObjectiveStream () | Opens the train.objective file in the expdir for appending. |
| ostream & | getTrainObjectiveStream () | Returns the stream for writing the train objective (and other costs). The stream is opened by calling openTrainObjectiveStream if it wasn't already. |
| void | openTestResultsStreams () | Opens the files in append mode for writing the test results. |
| ostream & | getTestResultsStream (int k) | Returns the stream corresponding to test set k (as specified by setTestDuringTrain). The stream is opened by calling openTestResultsStreams if it wasn't already. |
| void | freeTestResultsStreams () | Frees the resources used by the test_results_streams. |
| void | outputResultLineToFile (const string &filename, const Vec &results, bool append, const string &names) | Outputs a test result line to a file. |
| void | setTrainCost (Vec &cost) | |

Static Protected Member Functions

| Type | Name | Description |
|---|---|---|
| void | declareOptions (OptionList &ol) | Redefine this in subclasses: call declareOption(...) for each option, and then call inherited::declareOptions(options) (see the declareOption function further down). |

Protected Attributes

| Type | Name | Description |
|---|---|---|
| Vec | tmpvec | |
| ofstream * | train_objective_stream | File stream where objectives and costs are saved during training. |
| Array< ofstream * > | test_results_streams | Opened streams where test results are saved. |
| string | expdir | The directory in which to save files related to this model (see setExperimentDirectory()). You may assume that it ends with a slash (setExperimentDirectory(...) ensures this). |
| int | epoch_ | It's used as part of the model filename saved by calling save(), which measure() does if ??? incomplete ??? |
| bool | distributed_ | This is set to true to indicate that MPI parallelization occurred at the level of this learner, possibly with data distributed across several nodes (in which case PLMPI::synchronized should be false). It is initially false. |
| real | earlystop_previousval | Temporary values relevant for early stopping. |
| Array< Measurer * > | measurers | Array of measurers. |
| bool | measure_cpu_time_first | |
| bool | each_cpu_saves_its_errors | |
| Vec | train_cost | |

Private Member Functions

| Type | Name | Description |
|---|---|---|
| void | build_ () | |

Static Private Attributes

| Type | Name | Description |
|---|---|---|
| Vec | tmp_input | |
| Vec | tmp_target | |
| Vec | tmp_weight | |
| Vec | tmp_output | |
| Vec | tmp_costs | |

The main things that a Learner can do are:

- `void train(VMat training_set);`: get trained
- `void use(const Vec& input, Vec& output);`: compute the output given the input
- `Vec test(VMat test_set);`: compute some performance statistics on a test set
- `void applyAndComputeCosts(const VMat& data, VMat outputs, VMat costs);`: compute outputs and costs when applying the trained model on data

Definition at line 72 of file Learner.h.

**typedef Object PLearn::Learner::inherited**

Reimplemented from PLearn::Object. Reimplemented in PLearn::ConditionalDistribution, PLearn::ConditionalGaussianDistribution, PLearn::Distribution, PLearn::EmpiricalDistribution, PLearn::LocallyWeightedDistribution, PLearn::NeuralNet, and PLearn::GraphicalBiText.

**PLearn::Learner::Learner (int the_inputsize=0, int the_targetsize=0, int the_outputsize=0)**

**** SUBCLASS WRITING: **** All subclasses of Learner should implement this form of constructor. Constructors should simply set all build options (member variables) to acceptable values and call build(), which will do the actual job of constructing the object.

Definition at line 74 of file Learner.cc. References default_vlog(), PLearn::mean_stats(), measure_cpu_time_first, minibatch_size, report_test_progress_every, setEarlyStopping(), setTestStatistics(), PLearn::stderr_stats(), test_every, and vlog.

**virtual PLearn::Learner::~Learner ()**

Definition at line 365 of file Learner.cc. References freeTestResultsStreams(), and train_objective_stream.

**void PLearn::Learner::appendMeasurer (Measurer &measurer)**

Declares a new measurer whose measure method will be called when the measure method of this learner is called (in particular, after each training epoch).

Definition at line 552 of file Learner.h. References PLearn::TVec< Measurer * >::append(), and measurers.

**virtual void PLearn::Learner::apply (const VMat &data, VMat outputs)**

Calls the 'use' method many times on the first inputsize() elements of each row of a 'data' VMat, and puts the machine's 'outputs' in a writable VMat (e.g. a file or a matrix). Note: if one also wants to compute costs, the method applyAndComputeCosts should be called instead.

Definition at line 522 of file Learner.cc. References inputsize(), PLearn::VMat::length(), outputsize(), PLearn::TVec< T >::subVec(), PLearn::use(), and PLearn::VMat::width().
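The row-by-row loop that apply performs can be sketched without PLearn. This is a minimal sketch, not the actual Learner::apply code. It makes three assumptions: a VMat is replaced by a plain vector of rows, `use` is passed in as a hypothetical `std::function`, and every row has at least `inputsize` entries.

```cpp
#include <cstddef>
#include <functional>
#include <vector>

using Row = std::vector<double>;
using Table = std::vector<Row>;
// Hypothetical stand-in for Learner::use(const Vec& input, Vec& output).
using UseFn = std::function<void(const Row& input, Row& output)>;

// Sketch of the apply() loop: feed the first `inputsize` entries of each
// data row to `use`, and store each output vector as one row of the result.
// Assumes every row has at least `inputsize` entries.
Table applySketch(const Table& data, std::size_t inputsize,
                  std::size_t outputsize, const UseFn& use) {
    Table outputs;
    outputs.reserve(data.size());
    Row input(inputsize);
    Row output(outputsize);
    for (const Row& row : data) {
        // Analogue of row.subVec(0, inputsize): target columns are ignored.
        input.assign(row.begin(),
                     row.begin() + static_cast<std::ptrdiff_t>(inputsize));
        use(input, output);
        outputs.push_back(output);
    }
    return outputs;
}
```

If costs were needed as well, the same loop would also call a cost computation per row, which is the job the documentation assigns to applyAndComputeCosts.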

**virtual void PLearn::Learner::applyAndComputeCosts (const VMat &data, VMat outputs, VMat costs)**

This method calls useAndCost repeatedly on all the rows of data, putting all the resulting output and cost vectors in the outputs and costs VMats.

Definition at line 599 of file Learner.cc. References costsize(), k, PLearn::VMat::length(), minibatch_size, outputsize(), PLearn::TVec< T >::subVec(), and useAndCostOnTestVec(). Referenced by applyAndComputeCostsOnTestMat().

**virtual void PLearn::Learner::applyAndComputeCostsOnTestMat (const VMat &test_set, int i, const Mat &output_block, const Mat &cost_block)**

Like useAndCostOnTestVec, but on a block (of length minibatch_size) of rows from the test set: applies the learner and computes outputs and costs for the block of test_set rows starting at i. By default, calls applyAndComputeCosts.

Definition at line 807 of file Learner.cc. References applyAndComputeCosts(), PLearn::TMat< T >::length(), and PLearn::VMat::subMatRows(). Referenced by test().
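A test loop that works block by block calls this method once per block of minibatch_size rows. The sketch below computes the [start, end) row ranges such a loop would use. `blockRanges` is a hypothetical helper. The source does not describe how the final partial block is handled, so this sketch simply assumes it is made shorter.

```cpp
#include <algorithm>
#include <utility>
#include <vector>

// Hypothetical helper: the [start, end) row ranges that a test loop would
// hand to applyAndComputeCostsOnTestMat when processing `nrows` rows in
// blocks of `minibatch_size`. Assumes the last block is simply shorter.
std::vector<std::pair<int, int>> blockRanges(int nrows, int minibatch_size) {
    std::vector<std::pair<int, int>> ranges;
    if (minibatch_size <= 0) return ranges;  // no sensible block size
    for (int i = 0; i < nrows; i += minibatch_size) {
        ranges.emplace_back(i, std::min(i + minibatch_size, nrows));
    }
    return ranges;
}
```

For example, 10 rows with minibatch_size 4 give the blocks [0, 4), [4, 8) and [8, 10).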

**string PLearn::Learner::basename () const**

Returns expdir+train_set->getAlias() (if train_set is indeed defined and has an alias...).

Definition at line 118 of file Learner.cc. References c_str(), PLearn::Object::classname(), expdir, experiment_name, PLERROR, PLWARNING, and train_set. Referenced by measure(), and stop_if_wanted().

**virtual void PLearn::Learner::build ()**

**** SUBCLASS WRITING: **** This method should be redefined in subclasses, to just call inherited::build() and then build_().

Reimplemented from PLearn::Object. Reimplemented in PLearn::ConditionalGaussianDistribution, PLearn::Distribution, PLearn::LocallyWeightedDistribution, PLearn::NeuralNet, and PLearn::GraphicalBiText. Definition at line 236 of file Learner.cc. References build_().

**void PLearn::Learner::build_ ()**

**** SUBCLASS WRITING: **** The build_ and build methods should be redefined in subclasses. build_ should do the actual building of the Learner according to the build options (member variables) previously set. (These may have been set by hand, by a constructor, by the load method, or by setOption.) As build() may be called several times (after changing options, to "rebuild" an object with different build options), make sure your implementation can handle this properly.

Reimplemented from PLearn::Object. Reimplemented in PLearn::Distribution, PLearn::LocallyWeightedDistribution, PLearn::NeuralNet, and PLearn::GraphicalBiText. Definition at line 229 of file Learner.cc. References earlystop_minval, and earlystop_previousval. Referenced by build().
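The build()/build_() convention described above works as follows. A subclass constructor sets its options and calls build(). Each public build() calls inherited::build() and then its own private build_(), so every level of the hierarchy rebuilds its own part, base class first. The sketch below illustrates this with hypothetical classes (`BaseLearnerSketch`, `MyLearnerSketch`) that are not PLearn types. Note that build_ resizes rather than appends, so a second build() call after an option change stays consistent.

```cpp
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical stand-ins illustrating the build()/build_() convention;
// they are not PLearn classes.
class BaseLearnerSketch {
public:
    explicit BaseLearnerSketch(int the_inputsize = 0) : inputsize_(the_inputsize) {}
    virtual ~BaseLearnerSketch() = default;
    // Public entry point; subclasses redefine it as inherited::build(); build_();
    virtual void build() { build_(); }
    int inputsize_;
    std::vector<std::string> trace;  // records which build_() ran, for illustration
private:
    // Non-virtual: builds only what this class itself owns.
    void build_() { trace.push_back("base"); }
};

class MyLearnerSketch : public BaseLearnerSketch {
    using inherited = BaseLearnerSketch;
public:
    // Constructor: set build options to acceptable values, then call build().
    explicit MyLearnerSketch(int the_inputsize = 0, int the_nhidden = 1)
        : inherited(the_inputsize), nhidden(the_nhidden) { build(); }
    void build() override {
        inherited::build();
        build_();
    }
    int nhidden;                  // a build option
    std::vector<double> weights;  // sized from the build options
private:
    // May run several times: it resizes (never appends), so a rebuild after
    // an option change reflects the new options.
    void build_() {
        trace.push_back("derived");
        weights.assign(static_cast<std::size_t>(inputsize_ * nhidden), 0.0);
    }
};
```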

**virtual void PLearn::Learner::computeCost (const Vec &input, const Vec &target, const Vec &output, const Vec &cost)**

Computes the cost vector, given input, target and output. The default version applies the declared CostFuncs to the (output, target) pair, putting the cost computed by each CostFunc in one element of the cost vector. If you overload this method in subclasses (e.g. to compute a cost that depends on the internal elements of the model), you must also redefine costsize() and costNames() accordingly.

Reimplemented in PLearn::NeuralNet. Definition at line 280 of file Learner.cc. References k, PLearn::TVec< CostFunc >::size(), and test_costfuncs. Referenced by computeCostsFromOutputs(), and useAndCost().
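In the default computeCost, element k of the cost vector is the k-th declared cost function applied to (output, target). The cost vector therefore has one entry per cost function. A minimal sketch, assuming a hypothetical `CostFn` (a `std::function`) in place of PLearn's CostFunc, with two illustrative cost functions that are not taken from PLearn:

```cpp
#include <cmath>
#include <cstddef>
#include <functional>
#include <stdexcept>
#include <vector>

using Vec = std::vector<double>;
// Hypothetical stand-in for PLearn's CostFunc: maps (output, target) to a scalar.
using CostFn = std::function<double(const Vec& output, const Vec& target)>;

// Sketch of the default computeCost behaviour: one cost element per cost function.
void computeCostSketch(const std::vector<CostFn>& costfuncs,
                       const Vec& output, const Vec& target, Vec& cost) {
    if (cost.size() != costfuncs.size())
        throw std::invalid_argument("cost must have one slot per cost function");
    for (std::size_t k = 0; k < costfuncs.size(); ++k)
        cost[k] = costfuncs[k](output, target);
}

// Two example cost functions (illustrative only).
double squaredError(const Vec& output, const Vec& target) {
    double s = 0.0;
    for (std::size_t j = 0; j < output.size(); ++j) {
        const double d = output[j] - target[j];
        s += d * d;
    }
    return s;
}

double absoluteError(const Vec& output, const Vec& target) {
    double s = 0.0;
    for (std::size_t j = 0; j < output.size(); ++j)
        s += std::fabs(output[j] - target[j]);
    return s;
}
```

This one-to-one mapping is why a subclass that changes the cost computation must also keep costsize() and costNames() in step with it.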

**virtual void PLearn::Learner::computeCosts (const VMat &data, VMat costs)**

This method calls useAndCost repeatedly on all the rows of data, throwing away the resulting output vectors but putting all the cost vectors in the costs VMat.

Definition at line 539 of file Learner.cc. References costsize(), PLearn::endl(), PLearn::VMat::length(), minibatch_size, outputsize(), and useAndCostOnTestVec().

**virtual void PLearn::Learner::computeCosts (const VVec &input, VVec &target, VVec &weight, Vec &costs)**

The default version calls computeOutputAndCosts. This may be overloaded if there is a more efficient way to compute the costs directly, without computing the whole output vector.

Definition at line 998 of file Learner.cc. References computeOutputAndCosts(), outputsize(), PLearn::TVec< T >::resize(), and tmp_output.

**virtual void PLearn::Learner::computeCostsFromOutputs (const VVec &input, const Vec &output, const VVec &target, const VVec &weight, Vec &costs)**

*** SUBCLASS WRITING: *** This should be overloaded in subclasses to compute the weighted costs from an already computed output.

Definition at line 966 of file Learner.cc. References computeCost(), PLearn::TVec< T >::length(), PLearn::VVec::length(), PLERROR, PLearn::TVec< T >::resize(), tmp_input, tmp_target, and tmp_weight. Referenced by computeOutputAndCosts().

**virtual void PLearn::Learner::computeLeaveOneOutCosts (const VMat &data, VMat costsmat, CostFunc costf)**

Same as computeLeaveOneOutCosts(data, costs), except that only the single cost passed as argument (costf) is computed, rather than all the Learner's costs (those set with setTestCostFunctions, plus its possible additional internal cost).

Definition at line 574 of file Learner.cc. References PLearn::CostFunc, PLearn::flush(), inputsize(), PLearn::VMat::length(), outputsize(), PLERROR, PLearn::removeRow(), PLearn::TVec< T >::subVec(), targetsize(), train(), PLearn::use(), vlog, and PLearn::VMat::width().

**virtual void PLearn::Learner::computeLeaveOneOutCosts (const VMat &data, VMat costs)**

For each data point i, trains with the dataset removeRow(data, i), calls useAndCost on point i, and puts the results in the costs VMat.

Definition at line 553 of file Learner.cc. References costsize(), PLearn::flush(), PLearn::VMat::length(), outputsize(), PLearn::removeRow(), train(), useAndCostOnTestVec(), and vlog.
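Leave-one-out retrains the model once per row: each time it trains on every row except i and then evaluates on row i. A dataset of n rows therefore costs n trainings. The sketch below uses a hypothetical toy learner (`MeanPredictor`) that ignores inputs and predicts the mean training target, with squared error as the only cost. It works on targets alone for brevity. It mirrors the loop described above but is not the PLearn implementation.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical toy learner: predicts the mean of the training targets.
struct MeanPredictor {
    double mean = 0.0;
    void train(const std::vector<double>& targets) {
        double s = 0.0;
        for (double t : targets) s += t;
        mean = targets.empty() ? 0.0 : s / static_cast<double>(targets.size());
    }
    double use() const { return mean; }
};

// Leave-one-out sketch: for each i, train on all rows except i
// (the analogue of removeRow(data, i)), then record the squared error on row i.
std::vector<double> leaveOneOutCosts(const std::vector<double>& targets) {
    std::vector<double> costs(targets.size());
    for (std::size_t i = 0; i < targets.size(); ++i) {
        std::vector<double> rest;
        rest.reserve(targets.size() - 1);
        for (std::size_t j = 0; j < targets.size(); ++j)
            if (j != i) rest.push_back(targets[j]);
        MeanPredictor learner;  // fresh learner for each held-out row
        learner.train(rest);
        const double err = learner.use() - targets[i];
        costs[i] = err * err;
    }
    return costs;
}
```

For the targets {1, 2, 3}, the held-out costs are 2.25, 0 and 2.25.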

**virtual void PLearn::Learner::computeOutput (const VVec &input, Vec &output)**

*** SUBCLASS WRITING: *** This should be overloaded in subclasses to compute the output from the input.

Definition at line 956 of file Learner.cc. References PLearn::VVec::length(), PLearn::TVec< T >::resize(), tmp_input, and PLearn::use(). Referenced by computeOutputAndCosts().

**virtual void PLearn::Learner::computeOutputAndCosts (const VVec &input, VVec &target, const VVec &weight, Vec &output, Vec &costs)**

The default version calls computeOutput and then computeCostsFromOutputs. You may overload this if you have a more efficient way to compute both the output and the weighted costs at the same time.

Definition at line 991 of file Learner.cc. References computeCostsFromOutputs(), and computeOutput(). Referenced by computeCosts().
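Taken together, the default versions form a delegation chain: computeCosts calls computeOutputAndCosts, which calls computeOutput and then computeCostsFromOutputs. A subclass that defines only the two "SUBCLASS WRITING" methods therefore gets the other two for free. The sketch below mirrors that chain with hypothetical classes. It uses plain vectors instead of VVec/Vec and drops the weight argument for brevity.

```cpp
#include <cstddef>
#include <vector>

using Vec = std::vector<double>;

// Hypothetical base mirroring the default delegation chain (weights omitted).
class LearnerSketch {
public:
    virtual ~LearnerSketch() = default;
    virtual std::size_t outputsize() const = 0;
    virtual void computeOutput(const Vec& input, Vec& output) = 0;
    virtual void computeCostsFromOutputs(const Vec& input, const Vec& output,
                                         const Vec& target, Vec& costs) = 0;
    // Default: compute the output, then the costs from that output.
    virtual void computeOutputAndCosts(const Vec& input, const Vec& target,
                                       Vec& output, Vec& costs) {
        computeOutput(input, output);
        computeCostsFromOutputs(input, output, target, costs);
    }
    // Default: go through computeOutputAndCosts with a scratch output vector
    // (Learner::computeCosts references a tmp_output attribute for this).
    virtual void computeCosts(const Vec& input, const Vec& target, Vec& costs) {
        tmp_output.resize(outputsize());
        computeOutputAndCosts(input, target, tmp_output, costs);
    }
protected:
    Vec tmp_output;
};

// Hypothetical subclass: only the two "SUBCLASS WRITING" methods are defined.
class DoublerSketch : public LearnerSketch {
public:
    std::size_t outputsize() const override { return 1; }
    void computeOutput(const Vec& input, Vec& output) override {
        output.assign(1, 2.0 * input[0]);
    }
    void computeCostsFromOutputs(const Vec&, const Vec& output,
                                 const Vec& target, Vec& costs) override {
        const double d = output[0] - target[0];
        costs.assign(1, d * d);  // squared error
    }
};
```

Overriding computeCosts directly, as the documentation allows, would skip the output computation entirely when only costs are needed.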

**Vec PLearn::Learner::computeTestStatistics (const VMat &costs)**

Given a VMat of costs, as computed for example with computeCosts or applyAndComputeCosts, computes the test statistics over those costs. The result is the concatenation of the statistics computed for each of the columns (cost functions) of costs.

Definition at line 620 of file Learner.cc. References PLearn::StatsItArray::computeStats(), PLearn::concat(), and test_statistics.

**virtual Array< string > PLearn::Learner::costNames () const**

Returns an Array of strings for the names of the components of the cost. The default version returns the info() strings of the cost functions in test_costfuncs.

Reimplemented in PLearn::NeuralNet. Definition at line 821 of file Learner.cc. References PLearn::Object::info(), PLearn::TVec< T >::size(), PLearn::TVec< CostFunc >::size(), PLearn::space_to_underscore(), and test_costfuncs. Referenced by testResultsNames().

**virtual int PLearn::Learner::costsize () const**

**** SUBCLASS WRITING: **** should be redefined if the user redefines computeCost; the default version returns

Reimplemented in PLearn::NeuralNet. Definition at line 818 of file Learner.cc. References PLearn::TVec< CostFunc >::size(), and test_costfuncs. Referenced by applyAndComputeCosts(), computeCosts(), computeLeaveOneOutCosts(), and test().

**static void PLearn::Learner::declareOptions (OptionList &ol)**

Redefine this in subclasses: call declareOption(...) for each option, and then call inherited::declareOptions(options) (see the declareOption function further down). Example: `static void declareOptions(OptionList& ol) { declareOption(ol, "inputsize", &MyObject::inputsize_, OptionBase::buildoption, "the size of the input\n it must be provided"); declareOption(ol, "weights", &MyObject::weights, OptionBase::learntoption, "the learnt model weights"); inherited::declareOptions(ol); }`

Reimplemented from PLearn::Object. Reimplemented in PLearn::ConditionalGaussianDistribution, PLearn::Distribution, PLearn::EmpiricalDistribution, PLearn::LocallyWeightedDistribution, PLearn::NeuralNet, and PLearn::GraphicalBiText. Definition at line 143 of file Learner.cc. References PLearn::declareOption(), and PLearn::OptionList.
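In the example above, each class declares its own options and then calls inherited::declareOptions(ol). The final option list therefore holds the subclass's options followed by those of every ancestor. The sketch below shows only that chaining. `OptionSketch`, `declareOptionSketch` and the base option "expdir" are hypothetical simplifications. PLearn's real declareOption also takes a pointer to the member being described, which is omitted here.

```cpp
#include <string>
#include <vector>

// Hypothetical, much-simplified stand-ins for PLearn's option machinery;
// the real declareOption also takes a pointer to the member it describes.
enum class OptionKind { buildoption, learntoption };

struct OptionSketch {
    std::string name;
    OptionKind kind;
    std::string description;
};

using OptionListSketch = std::vector<OptionSketch>;

void declareOptionSketch(OptionListSketch& ol, const std::string& name,
                         OptionKind kind, const std::string& description) {
    ol.push_back({name, kind, description});
}

struct BaseObjectSketch {
    static void declareOptions(OptionListSketch& ol) {
        // Hypothetical base-class option, for illustration only.
        declareOptionSketch(ol, "expdir", OptionKind::buildoption,
                            "directory for files related to this model");
    }
};

struct MyObjectSketch : BaseObjectSketch {
    using inherited = BaseObjectSketch;
    static void declareOptions(OptionListSketch& ol) {
        // Declare this class's own options first...
        declareOptionSketch(ol, "inputsize", OptionKind::buildoption,
                            "the size of the input\n it must be provided");
        declareOptionSketch(ol, "weights", OptionKind::learntoption,
                            "the learnt model weights");
        // ...then let the parent class append its options.
        inherited::declareOptions(ol);
    }
};
```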

**static PStream & PLearn::Learner::default_vlog ()**

The default stream to which lout is set upon construction of all Learners (defaults to cout).

Definition at line 64 of file Learner.cc. References PLearn::PStream::outmode. Referenced by Learner().

**int PLearn::Learner::epoch () const**

Definition at line 406 of file Learner.h. References epoch_. Referenced by measure().

**virtual void PLearn::Learner::forget ()**

*** SUBCLASS WRITING: *** This method should be called AFTER or inside the build method, e.g. in order to re-initialize parameters. It should put the Learner in a 'fresh' state, not influenced by any past call to train (everything learned is forgotten!).

Reimplemented in PLearn::NeuralNet. Definition at line 242 of file Learner.cc. References earlystop_minval, earlystop_previousval, and epoch_.

**void PLearn::Learner::freeTestResultsStreams ()**

Frees the resources used by the test_results_streams.

Definition at line 345 of file Learner.cc. References k, PLearn::TVec< ofstream * >::resize(), PLearn::TVec< ofstream * >::size(), and test_results_streams. Referenced by openTestResultsStreams(), and ~Learner().

**string PLearn::Learner::getExperimentDirectory () const**

Definition at line 229 of file Learner.h. References expdir.

**const Array< VMat > & PLearn::Learner::getTestDuringTrain () const**

Returns the test sets that are used during training.

Definition at line 433 of file Learner.h. References test_sets.

**ostream & PLearn::Learner::getTestResultsStream (int k)**

Returns the stream corresponding to test set k (as specified by setTestDuringTrain). The stream is opened by calling openTestResultsStreams if it wasn't already.

Definition at line 354 of file Learner.cc. References k, openTestResultsStreams(), PLearn::TVec< ofstream * >::size(), and test_results_streams.

**Vec PLearn::Learner::getTrainCost ()**

Definition at line 562 of file Learner.h. References train_cost.

**VMat PLearn::Learner::getTrainingSet ()**

Definition at line 255 of file Learner.h. References train_set.

**ostream & PLearn::Learner::getTrainObjectiveStream ()**

Returns the stream for writing the train objective (and other costs). The stream is opened by calling openTrainObjectiveStream if it wasn't already.

Definition at line 312 of file Learner.cc. References openTrainObjectiveStream(), and train_objective_stream.

**int PLearn::Learner::inputsize () const**

Simple accessor methods (do NOT overload! Set inputsize_ and outputsize_ instead).

Definition at line 402 of file Learner.h. References inputsize_. Referenced by apply(), PLearn::NeuralNet::build_(), PLearn::compute2dGridOutputs(), computeLeaveOneOutCosts(), PLearn::displayDecisionSurface(), PLearn::NeuralNet::initializeParams(), PLearn::LocallyWeightedDistribution::log_density(), PLearn::LocallyWeightedDistribution::train(), PLearn::Distribution::train(), and useAndCostOnTestVec().

**void PLearn::Learner::load (const string &filename="")**

DEPRECATED. Call PLearn::load(filename, object) instead.

Reimplemented from PLearn::Object. Definition at line 912 of file Learner.cc. References experiment_name, and PLERROR.

**virtual void PLearn::Learner::makeDeepCopyFromShallowCopy (CopiesMap &copies)**

Does the necessary operations to transform a shallow copy (this) into a deep copy by deep-copying all the members that need to be. Typical implementation: `void CLASS_OF_THIS::makeDeepCopyFromShallowCopy(CopiesMap& copies) { SUPERCLASS_OF_THIS::makeDeepCopyFromShallowCopy(copies); member_ptr = member_ptr->deepCopy(copies); member_smartptr = member_smartptr->deepCopy(copies); member_mat.makeDeepCopyFromShallowCopy(copies); member_vec.makeDeepCopyFromShallowCopy(copies); ... }`

Reimplemented from PLearn::Object. Reimplemented in PLearn::EmpiricalDistribution, and PLearn::NeuralNet. Definition at line 89 of file Learner.cc. References avg_objective, avgsq_objective, PLearn::CopiesMap, PLearn::deepCopyField(), test_costfuncs, and test_statistics.
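A copies map lets a deep copy preserve sharing: every original object is copied at most once, so two members that point to the same object still point to one (new) object in the copy. The sketch below illustrates the idea with a hypothetical `Node` type and a `std::map`. It is not PLearn's CopiesMap or deepCopyField. It registers each copy before recursing, which also keeps cyclic references from recursing forever.

```cpp
#include <map>
#include <memory>

struct Node;
// Hypothetical analogue of PLearn's CopiesMap: original object -> its copy.
using CopiesMapSketch = std::map<const Node*, std::shared_ptr<Node>>;

struct Node {
    double value = 0.0;
    std::shared_ptr<Node> a;  // members that may point to shared objects
    std::shared_ptr<Node> b;

    // Returns the copy of this object, creating it only once per copies map.
    std::shared_ptr<Node> deepCopy(CopiesMapSketch& copies) const {
        auto it = copies.find(this);
        if (it != copies.end()) return it->second;  // already copied: reuse
        auto copy = std::make_shared<Node>(*this);   // shallow copy first
        copies[this] = copy;                         // register before recursing
        copy->makeDeepCopyFromShallowCopy(copies);
        return copy;
    }

    // Turns a shallow copy into a deep one by replacing each pointer member
    // with the (unique) copy of the object it points to.
    void makeDeepCopyFromShallowCopy(CopiesMapSketch& copies) {
        if (a) a = a->deepCopy(copies);
        if (b) b = b->deepCopy(copies);
    }
};
```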

**virtual bool PLearn::Learner::measure (int step, const Vec &costs)**

**** SUBCLASS WRITING: **** This method should be called by an iterative training algorithm's train method after each training step (the meaning of a training step is learner-dependent), passing it the current step number and the costs relevant for the training process. Training must be stopped if the returned value is true: it indicates that the early-stopping criterion has been met.

The default version:

- writes the step and costs to the objectiveout stream at each step;
- performs the tests specified by setTestDuringTrain every 'test_every' steps, and decides upon early stopping as specified by setEarlyStopping;
- calls the measure method of all measurers that have been declared with appendMeasurer.

This is the measure method from Measurer. You may override this method if you wish to measure other things during training. In this case, your method will probably want to call this default version (Learner::measure) as part of it.

Reimplemented from PLearn::Measurer. Definition at line 398 of file Learner.cc. References PLearn::abs(), basename(), best_step, each_cpu_saves_its_errors, earlystop_max_degradation, earlystop_max_degraded_steps, earlystop_min_improvement, earlystop_min_value, earlystop_minval, earlystop_previousval, earlystop_relative_changes, earlystop_save_best, earlystop_testresultindex, earlystop_testsetnum, PLearn::endl(), epoch(), epoch_, expdir, fname, PLearn::join(), PLearn::TVec< T >::length(), PLearn::load(), measurers, minibatch_size, outputResultLineToFile(), PLERROR, PLearn::save(), save_at_every_epoch, save_objective, PLearn::TVec< Measurer * >::size(), PLearn::TVec< VMat >::size(), test(), test_every, test_sets, testResultsNames(), PLearn::tostring(), trainObjectiveNames(), and vlog.
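The calling contract described above can be summarized as: train() calls measure(step, costs) after every step and stops as soon as it returns true. The sketch below shows that contract with a hypothetical class whose per-step costs are supplied in advance. Its stopping rule (stop once the cost rises above the best value seen so far) is deliberately simple and is not Learner::measure's default early-stopping logic.

```cpp
#include <cstddef>
#include <utility>
#include <vector>

// Hypothetical learner showing the calling convention around measure():
// train() calls it after every step and stops as soon as it returns true.
class IterativeLearnerSketch {
public:
    IterativeLearnerSketch(std::vector<double> step_costs, int nsteps)
        : step_costs_(std::move(step_costs)), nsteps_(nsteps) {}
    virtual ~IterativeLearnerSketch() = default;

    // Stand-in for Learner::measure(step, costs). The rule used here (stop
    // once the cost rises above the best value seen) is only an illustration.
    virtual bool measure(int step, double cost) {
        recorded.push_back(cost);  // cf. writing to objectiveout
        if (step == 0 || cost < best_) {
            best_ = cost;
            return false;
        }
        return cost > best_;
    }

    // Returns the number of steps actually performed.
    int train() {
        int step = 0;
        while (step < nsteps_) {
            // Pretend one training step produced this cost.
            const double cost = step_costs_[static_cast<std::size_t>(step)];
            ++step;
            if (measure(step - 1, cost)) break;  // early-stopping criterion met
        }
        return step;
    }

    std::vector<double> recorded;

private:
    std::vector<double> step_costs_;
    int nsteps_;
    double best_ = 0.0;
};
```

A subclass that overrides measure() to record extra quantities would, as the documentation suggests, still call the base version and return its result.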

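**virtual void PLearn::Learner::newtest (VMat testset, VecStatsCollector &test_stats, VMat testoutputs=0, VMat testcosts=0)**

Should perform the test on testset, updating the test cost statistics, and optionally filling testoutputs and testcosts.

Definition at line 1010 of file Learner.cc. References PLERROR.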

**virtual void PLearn::Learner::newtrain (VecStatsCollector &train_stats)**

*** SUBCLASS WRITING: *** Should do the actual training until epoch==nepochs, and should call update on the stats with the training costs measured online.

Definition at line 1006 of file Learner.cc. References PLERROR.

**virtual void PLearn::Learner::oldread (istream &in)**

DEPRECATED. For backward compatibility with old saved objects.

Reimplemented from PLearn::Object. Definition at line 868 of file Learner.cc. References earlystop_max_degradation, earlystop_max_degraded_steps, earlystop_min_improvement, earlystop_min_value, earlystop_relative_changes, earlystop_save_best, earlystop_testresultindex, earlystop_testsetnum, epoch_, expdir, experiment_name, inputsize_, outputsize_, PLearn::readField(), PLearn::readFooter(), PLearn::readHeader(), save_at_every_epoch, targetsize_, test_costfuncs, test_every, and test_statistics.

**virtual void PLearn::Learner::oldwrite (ostream &out) const**

*** SUBCLASS WRITING: *** This matched pair of Object functions needs to be redefined by subclasses. They are used for saving/loading a model to memory or to a file. However, subclasses can call this one to handle the saving/loading of the following data fields: the current options and the early-stopping parameters.

Definition at line 846 of file Learner.cc. References earlystop_max_degradation, earlystop_max_degraded_steps, earlystop_min_improvement, earlystop_min_value, earlystop_relative_changes, earlystop_save_best, earlystop_testresultindex, earlystop_testsetnum, experiment_name, inputsize_, outputsize_, save_at_every_epoch, targetsize_, test_costfuncs, test_every, test_statistics, PLearn::writeField(), PLearn::writeFooter(), and PLearn::writeHeader().

**void PLearn::Learner::openTestResultsStreams ()**

Opens the files in append mode for writing the test results.

Definition at line 320 of file Learner.cc. References PLearn::endl(), expdir, freeTestResultsStreams(), PLearn::join(), k, PLERROR, PLearn::TVec< ofstream * >::resize(), PLearn::TVec< VMat >::size(), test_results_streams, test_sets, and testResultsNames(). Referenced by getTestResultsStream().

**void PLearn::Learner::openTrainObjectiveStream ()**

Opens the train.objective file in the expdir for appending.

Definition at line 294 of file Learner.cc. References PLearn::endl(), expdir, PLearn::join(), PLERROR, train_objective_stream, and trainObjectiveNames(). Referenced by getTrainObjectiveStream().

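**void PLearn::Learner::outputResultLineToFile (const string &filename, const Vec &results, bool append, const string &names)**

Outputs a test result line to a file.

Definition at line 101 of file Learner.cc. References PLearn::endl(), epoch_, and fname. Referenced by measure().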

**int PLearn::Learner::outputsize () const**

Definition at line 404 of file Learner.h. References outputsize_. Referenced by apply(), applyAndComputeCosts(), PLearn::NeuralNet::build_(), PLearn::compute2dGridOutputs(), computeCosts(), computeLeaveOneOutCosts(), PLearn::displayDecisionSurface(), and test().

**PLearn::Learner::PLEARN_DECLARE_ABSTRACT_OBJECT (Learner)**

Does the necessary operations to transform a shallow copy (this) into a deep copy by deep-copying all the members that need to be.

**void PLearn::Learner::save (const string &filename="") const**

DEPRECATED. Call PLearn::save(filename, object) instead.

Reimplemented from PLearn::Object. Definition at line 898 of file Learner.cc. References experiment_name, force_saving_on_all_processes, and PLERROR.

**void PLearn::Learner::setEarlyStopping (int which_testset, int which_testresult, real max_degradation, real min_value=-FLT_MAX, real min_improvement=0, bool relative_changes=true, bool save_best=true, int max_degraded_steps=-1)**

which_testset and which_testresult select the test set and the cost function on which early stopping is based, from among those specified in setTestDuringTrain. Training will be stopped if any of the following holds:

- the degradation (the difference between the current value and the smallest value ever attained) grows beyond max_degradation;
- the current value goes below min_value;
- the difference between the previous value and the current value is below min_improvement.

If relative_changes is true, max_degradation is relative to the smallest value ever attained, and min_improvement is relative to the previous value. If save_best is true, the model with the lowest validation error is saved (with the write method, to memory), and if early stopping occurs, this saved model is reloaded (with the read method).

Definition at line 381 of file Learner.cc. References earlystop_max_degradation, earlystop_max_degraded_steps, earlystop_min_improvement, earlystop_min_value, earlystop_minval, earlystop_previousval, earlystop_relative_changes, earlystop_save_best, earlystop_testresultindex, and earlystop_testsetnum. Referenced by Learner().
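The three stopping tests listed for setEarlyStopping can be written as a small function over the stream of validation values. This is a minimal sketch, not the code of Learner::measure. It assumes that "relative" means dividing by the reference value (the smallest value so far for degradation, the previous value for improvement), and that values are positive when relative_changes is set. It ignores max_degraded_steps and save_best. All names in it are hypothetical.

```cpp
#include <algorithm>
#include <cfloat>

// Hypothetical parameters mirroring setEarlyStopping's arguments.
struct EarlyStopParams {
    double max_degradation;
    double min_value = -FLT_MAX;
    double min_improvement = 0.0;
    bool relative_changes = true;
};

// Running state: smallest value seen so far and the previous value.
struct EarlyStopState {
    double minval = DBL_MAX;
    double previousval = DBL_MAX;
    bool first = true;
};

// Returns true if training should stop after observing `value`.
bool shouldStop(const EarlyStopParams& p, EarlyStopState& s, double value) {
    bool stop = false;
    if (!s.first) {
        double degradation = value - s.minval;       // vs. best value so far
        double improvement = s.previousval - value;  // vs. previous value
        if (p.relative_changes) {
            degradation /= s.minval;       // assumes positive values
            improvement /= s.previousval;
        }
        stop = degradation > p.max_degradation || improvement < p.min_improvement;
    }
    if (value < p.min_value) stop = true;  // good enough: stop
    s.minval = std::min(s.minval, value);
    s.previousval = value;
    s.first = false;
    return stop;
}
```

With the default min_improvement of 0, the improvement test fires on any increase over the previous value, so the tolerance that max_degradation offers only matters when min_improvement is set below zero.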

**virtual void PLearn::Learner::setExperimentDirectory (const string &the_expdir)**

The experiment directory is the directory in which files related to this model are to be saved. Typically, the following files will be saved in that directory:

- `model.psave` (saved best model)
- `model#.psave` (model saved after epoch #)
- `model#.<trainset_alias>.objective` (training objective and costs after each epoch)
- `model#.<testset_alias>.results` (test results after each epoch)

Definition at line 215 of file Learner.cc. References PLearn::abspath(), expdir, PLearn::force_mkdir(), and PLERROR.
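The naming scheme above, combined with the guarantee that expdir ends with a slash, can be sketched with a few string helpers. All of them are hypothetical. Reading "#" as the epoch number is an assumption. The real method also makes the path absolute and creates the directory (it references abspath and force_mkdir), which this sketch does not reproduce.

```cpp
#include <string>

// Hypothetical helper: ensure a non-empty directory path ends with '/',
// as expdir is documented to do.
std::string withTrailingSlash(std::string dir) {
    if (!dir.empty() && dir.back() != '/') dir += '/';
    return dir;
}

// Hypothetical helpers building the file names listed above.
// "#" is assumed to be the epoch number.
std::string bestModelFile(const std::string& expdir) {
    return withTrailingSlash(expdir) + "model.psave";
}

std::string epochModelFile(const std::string& expdir, int epoch) {
    return withTrailingSlash(expdir) + "model" + std::to_string(epoch) + ".psave";
}

std::string testResultsFile(const std::string& expdir, int epoch,
                            const std::string& testset_alias) {
    return withTrailingSlash(expdir) + "model" + std::to_string(epoch) + "." +
           testset_alias + ".results";
}
```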

|

|

**DEPRECATED.** Do not use! Use the setOption and build methods instead. Definition at line 814 of file Learner.cc. References PLERROR. |

|

|

Call this method to define what cost functions are computed by default (these are generic cost functions which compare the output with the target).

Definition at line 415 of file Learner.h. References test_costfuncs. |

|

|

Definition at line 362 of file Learner.cc. References test_sets. |

|


|

- testout: the stream to which the test results are written
- every: how often (in number of iterations) the tests are performed

Definition at line 287 of file Learner.cc. References test_every, test_sets, and testout. |

|

|

This method defines what statistics are computed on the costs (which compute a vector of statistics that depend on all the test costs).

Definition at line 420 of file Learner.h. References setTestStatistics(), and test_statistics. Referenced by Learner(), and setTestStatistics(). |

|

|

Definition at line 558 of file Learner.h. References PLearn::TVec< T >::length(), PLearn::TVec< T >::resize(), setTrainCost(), and train_cost. Referenced by setTrainCost(). |

|

|

Declare the train_set.

Definition at line 254 of file Learner.h. References setTrainingSet(), and train_set. Referenced by setTrainingSet(). |

|

|

Checks the stopping condition: by default, whether a file named experiment_name + "_stop" exists. If it does, the file is removed and exit(0) is called. Definition at line 922 of file Learner.cc. References basename(), PLearn::endl(), PLearn::file_exists(), fname, PLearn::save(), PLearn::tostring(), and vlog. Referenced by test(). |
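The stop-file convention can be sketched with std::filesystem. This is a hypothetical illustration, not the Learner.cc code: consumeStopFile() and requestStop() are invented names, and unlike the documented method the sketch returns a flag instead of calling exit(0), leaving that decision to the caller.

```cpp
#include <cassert>
#include <filesystem>
#include <fstream>
#include <string>
#include <system_error>

// Hypothetical check: if experiment_name + "_stop" exists, remove it and report true.
bool consumeStopFile(const std::string& experiment_name) {
    assert(!experiment_name.empty());
    const std::filesystem::path stop_file = experiment_name + "_stop";
    std::error_code ec;
    if (!std::filesystem::exists(stop_file, ec)) return false;
    std::filesystem::remove(stop_file, ec);  // consume the request so it fires only once
    return true;
}

// Creates the stop file, as a user would from a shell with `touch <experiment_name>_stop`.
void requestStop(const std::string& experiment_name) {
    std::ofstream out(experiment_name + "_stop");
}
```

Removing the file when it is seen means a single `touch` stops exactly one run rather than every later run that reuses the same experiment name.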

|

|

Definition at line 403 of file Learner.h. References targetsize_. Referenced by PLearn::NeuralNet::build_(), computeLeaveOneOutCosts(), PLearn::Distribution::train(), and useAndCostOnTestVec(). |

|


|

This function should work both with and without MPI. It returns the statistics computed by test_statistics on the test_costfuncs. If save_test_outputs is set, the test outputs are saved in the given file; similarly for save_test_costs. Definition at line 635 of file Learner.cc. References PLearn::TmpFilenames::addFilename(), applyAndComputeCostsOnTestMat(), PLearn::binread(), PLearn::binwrite(), PLearn::StatsItArray::computeStats(), PLearn::concat(), costsize(), PLearn::TVec< T >::data(), dont_parallelize, PLearn::StatsItArray::finish(), PLearn::StatsItArray::getResults(), PLearn::StatsItArray::init(), PLearn::TVec< T >::length(), PLearn::VMat::length(), PLearn::Mat, minibatch_size, outputsize(), PLearn::StatsItArray::requiresMultiplePasses(), PLearn::TVec< T >::resize(), PLearn::TMat< T >::resize(), stop_if_wanted(), test_statistics, PLearn::StatsItArray::update(), use_file_if_bigger, useAndCostOnTestVec(), and vlog. Referenced by measure(). |

|

|

Returns an Array of strings with the names of the cost statistics returned by the methods test and computeTestStatistics. The default version returns a cross product between the info() strings of test_statistics and the cost names returned by costNames(). Definition at line 829 of file Learner.cc. References costNames(), k, PLearn::TVec< T >::size(), PLearn::TVec< StatsIt >::size(), PLearn::space_to_underscore(), and test_statistics. Referenced by measure(), openTestResultsStreams(), PLearn::prettyprint_test_results(), and trainObjectiveNames(). |
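The cross-product naming can be sketched as two nested loops. This is a hypothetical illustration: the documentation only states that the names combine the statistic info() strings with costNames() and pass through space_to_underscore(); the "cost.stat" format, the loop order, and the function names below are assumptions.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical stand-in for PLearn::space_to_underscore(): replaces spaces with '_'.
std::string spaceToUnderscore(std::string s) {
    for (char& c : s)
        if (c == ' ') c = '_';
    return s;
}

// Hypothetical cross product: one name per (statistic, cost) pair.
// The "cost.stat" format and statistic-major order are assumptions.
std::vector<std::string> crossProductNames(const std::vector<std::string>& stat_names,
                                           const std::vector<std::string>& cost_names) {
    std::vector<std::string> names;
    names.reserve(stat_names.size() * cost_names.size());
    for (const auto& stat : stat_names)
        for (const auto& cost : cost_names)
            names.push_back(spaceToUnderscore(cost + "." + stat));
    assert(names.size() == stat_names.size() * cost_names.size());
    return names;
}
```

Whatever the exact format, the result has one entry per (statistic, cost) pair, so its length equals the number of values returned by test.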

|


|

*** SUBCLASS WRITING: *** Does the actual training. Allows training on a sample drawn from a training set. Definition at line 292 of file Learner.h. References PLERROR. |

|

|

*** SUBCLASS WRITING: *** Does the actual training. Subclasses must implement this method. Upon entry, the method should call setTrainingSet(training_set). Make sure that if(measure(step, objective_value)) is checked after each training step, and that training stops if it returns true. Implemented in PLearn::ConditionalGaussianDistribution, PLearn::Distribution, PLearn::EmpiricalDistribution, PLearn::LocallyWeightedDistribution, PLearn::NeuralNet, and PLearn::GraphicalBiText. Referenced by computeLeaveOneOutCosts(). |
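The subclass contract above (call setTrainingSet() on entry, call measure() after every step, stop when it returns true) can be sketched with minimal stand-in classes. Everything here is hypothetical: SketchLearner, MeanLearner and Dataset are invented, PLearn's real train() takes a VMat, and its measure() is not pure virtual.

```cpp
#include <cassert>
#include <vector>

using Dataset = std::vector<double>;  // stand-in for PLearn's VMat

// Hypothetical base class reproducing only the control-flow contract.
class SketchLearner {
public:
    virtual ~SketchLearner() = default;
    virtual void train(const Dataset& training_set) = 0;
protected:
    void setTrainingSet(const Dataset& ts) { train_set_ = &ts; }
    // Returns true when training should stop (e.g. early stopping triggered).
    virtual bool measure(int step, double objective) = 0;
    const Dataset* train_set_ = nullptr;
};

// Toy learner: each step moves its estimate halfway toward the sample mean.
class MeanLearner : public SketchLearner {
public:
    int steps_run = 0;
    double estimate = 0.0;
    void train(const Dataset& training_set) override {
        setTrainingSet(training_set);  // required on entry
        assert(!training_set.empty());
        double mean = 0.0;
        for (double x : training_set) mean += x;
        mean /= training_set.size();
        for (int step = 0; step < 100; ++step) {
            estimate += 0.5 * (mean - estimate);  // one training step
            ++steps_run;
            const double objective = (mean - estimate) * (mean - estimate);
            if (measure(step, objective)) break;  // stop as soon as measure() says so
        }
    }
protected:
    bool measure(int /*step*/, double objective) override { return objective < 1e-6; }
};
```

Keeping the stop decision in measure() lets the base class centralize early stopping, test-during-train and saving, while each subclass only supplies the training step.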

|

|

Returns an array of strings with the names of the fields written to objectiveout (by default, this calls testResultsNames()).

Definition at line 843 of file Learner.cc. References testResultsNames(). Referenced by measure(), and openTrainObjectiveStream(). |

|


|

Definition at line 302 of file Learner.h. References PLearn::TMat< T >::length(), and PLearn::use(). |

|


|

*** SUBCLASS WRITING: *** Uses a trained decider on input, filling output. If the cost should also be computed, then the user should call useAndCost instead of this method. Implemented in PLearn::ConditionalDistribution, PLearn::Distribution, PLearn::NeuralNet, and PLearn::GraphicalBiText. Referenced by PLearn::compute2dGridOutputs(). |

|


|

By default, this function calls use(input, output) and then computeCost(input, target, output, cost), so you can overload computeCost to change how the cost is computed.
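The default described above is a template-method pattern: useAndCost() fixes the order of calls and subclasses override the pieces. The sketch below is hypothetical; CostSketch, Doubler, the std::vector stand-in for Vec, and the squared-error default cost are invented for illustration and are not PLearn's implementation.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Vec = std::vector<double>;  // stand-in for PLearn's Vec

// Hypothetical base: useAndCost() calls use() and then computeCost().
class CostSketch {
public:
    virtual ~CostSketch() = default;
    virtual void use(const Vec& input, Vec& output) = 0;
    // Assumed default cost: squared error summed over output components.
    virtual void computeCost(const Vec& input, const Vec& target, const Vec& output, Vec& cost) {
        (void)input;  // unused by this default
        assert(target.size() == output.size());
        double se = 0.0;
        for (std::size_t i = 0; i < output.size(); ++i) {
            const double d = output[i] - target[i];
            se += d * d;
        }
        cost.assign(1, se);
    }
    virtual void useAndCost(const Vec& input, const Vec& target, Vec& output, Vec& cost) {
        use(input, output);
        computeCost(input, target, output, cost);
    }
};

// Toy subclass: only use() is provided; the cost comes from the base class.
class Doubler : public CostSketch {
public:
    void use(const Vec& input, Vec& output) override {
        output.resize(input.size());
        for (std::size_t i = 0; i < input.size(); ++i) output[i] = 2.0 * input[i];
    }
};
```

Overriding computeCost() alone changes the cost for every caller of useAndCost() without touching use().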

Reimplemented in PLearn::NeuralNet. Definition at line 274 of file Learner.cc. References computeCost(), and PLearn::use(). Referenced by useAndCostOnTestVec(). |

|


|

The default version calls useAndCost on test_set(i), so you don't need to overload this method unless you want to provide a more efficient implementation (e.g. if you have precomputed things for the test_set that you can use). Definition at line 250 of file Learner.cc. References inputsize(), k, minibatch_size, PLearn::TVec< T >::resize(), PLearn::TVec< T >::subVec(), targetsize(), tmpvec, useAndCost(), and PLearn::VMat::width(). Referenced by applyAndComputeCosts(), computeCosts(), computeLeaveOneOutCosts(), and test(). |

|

|

Definition at line 405 of file Learner.h. References weightsize_. Referenced by PLearn::NeuralNet::build_(), PLearn::LocallyWeightedDistribution::build_(), PLearn::LocallyWeightedDistribution::log_density(), and PLearn::LocallyWeightedDistribution::train(). |

|

|

average of the objective function(s) over the last test_every steps

Definition at line 146 of file Learner.h. Referenced by makeDeepCopyFromShallowCopy(). |

|

|

average of the squared objective function(s) over the last test_every steps

Definition at line 147 of file Learner.h. Referenced by makeDeepCopyFromShallowCopy(). |
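The two averages above (mean objective and mean squared objective over the last test_every steps) are enough to recover the variance as E[x²] − E[x]². The accumulator below is a hypothetical sketch of that bookkeeping; the class name and methods are invented and do not reflect how Learner stores these members.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical window accumulator for the objective over test_every steps.
class ObjectiveWindow {
public:
    void add(double objective) {
        sum_ += objective;
        sum_sq_ += objective * objective;
        ++n_;
    }
    double average() const { assert(n_ > 0); return sum_ / n_; }
    double averageSquared() const { assert(n_ > 0); return sum_sq_ / n_; }
    // Variance from the two averages; can lose precision when values are large and close.
    double variance() const {
        const double m = average();
        return averageSquared() - m * m;
    }
    void reset() { sum_ = sum_sq_ = 0.0; n_ = 0; }  // call at the start of each window
    std::size_t count() const { return n_; }
private:
    double sum_ = 0.0;
    double sum_sq_ = 0.0;
    std::size_t n_ = 0;
};
```

Storing the sum and the sum of squares keeps the update O(1) per step; a Welford-style update would be more numerically robust if the objective values are large and nearly equal.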

|

|

the step (usually epoch) at which validation cost was best

Definition at line 174 of file Learner.h. Referenced by measure(). |

|

|

This is set to true to indicate that MPI parallelization occurred at the level of this learner, possibly with data distributed across several nodes (in which case PLMPI::synchronized should be false). It is initially false.

|

|

|

By default, MPI parallelization done at a given level prevents further parallelization at lower levels. If true, this means "don't parallelize processing at this level". Definition at line 140 of file Learner.h. Referenced by test(). |

|

|

Definition at line 193 of file Learner.h. Referenced by measure(). |

|

|

maximum degradation in error from last best value

Definition at line 165 of file Learner.h. Referenced by measure(), oldread(), oldwrite(), and setEarlyStopping(). |

|

|

Maximum number of steps beyond the best one found [in version >= 1].

Definition at line 170 of file Learner.h. Referenced by measure(), oldread(), oldwrite(), and setEarlyStopping(). |

|

|

Minimum improvement in error; training stops if the improvement falls below it.

Definition at line 167 of file Learner.h. Referenced by measure(), oldread(), oldwrite(), and setEarlyStopping(). |

|

|

Minimum error; training stops if the error goes below this value.

Definition at line 166 of file Learner.h. Referenced by measure(), oldread(), oldwrite(), and setEarlyStopping(). |

|

|

Definition at line 180 of file Learner.h. Referenced by build_(), forget(), measure(), and setEarlyStopping(). |

|

|

temporary values relevant for early stopping

Definition at line 178 of file Learner.h. Referenced by build_(), forget(), measure(), and setEarlyStopping(). |

|

|

are max_degradation and min_improvement relative?

Definition at line 168 of file Learner.h. Referenced by measure(), oldread(), oldwrite(), and setEarlyStopping(). |

|

|

If true, return with the saved "best" model.

Definition at line 169 of file Learner.h. Referenced by measure(), oldread(), oldwrite(), and setEarlyStopping(). |

|

|

index of statistic (as returned by test) to use

Definition at line 164 of file Learner.h. Referenced by measure(), oldread(), oldwrite(), and setEarlyStopping(). |

|

|

index of test set (in test_sets) to use for early stopping

Definition at line 163 of file Learner.h. Referenced by measure(), oldread(), oldwrite(), and setEarlyStopping(). |

|

|

It's used as part of the model filename saved by calling save(), which measure() does if ??? incomplete ???

Definition at line 118 of file Learner.h. Referenced by epoch(), forget(), measure(), oldread(), and outputResultLineToFile(). |

|

|

The directory in which to save files related to this model (see setExperimentDirectory()). You may assume that it ends with a slash (setExperimentDirectory(...) ensures this).

Definition at line 115 of file Learner.h. Referenced by basename(), getExperimentDirectory(), measure(), oldread(), openTestResultsStreams(), openTrainObjectiveStream(), and setExperimentDirectory(). |

|

|

Definition at line 183 of file Learner.h. Referenced by basename(), load(), oldread(), oldwrite(), and save(). |

|

|

If false, only CPU 0 actually saves when running under MPI.

Definition at line 72 of file Learner.cc. Referenced by save(). |

|

|

The data VMats are assumed to consist of inputsize() input columns followed by targetsize() target columns.

Definition at line 133 of file Learner.h. Referenced by inputsize(), oldread(), and oldwrite(). |

|

|

Definition at line 191 of file Learner.h. Referenced by Learner(). |

|

|

Array of measurers.

Definition at line 189 of file Learner.h. Referenced by appendMeasurer(), and measure(). |

|

|

Test by blocks of this size, using apply rather than use.

Definition at line 150 of file Learner.h. Referenced by applyAndComputeCosts(), computeCosts(), Learner(), measure(), test(), and useAndCostOnTestVec(). |
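Testing by blocks amounts to cutting the row range into consecutive chunks of minibatch_size rows, the last one possibly shorter. The helper below is a hypothetical sketch of that partitioning only; in the real Learner each block would be passed to apply() rather than handled row by row with use().

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// Hypothetical partition of rows [0, n) into [begin, end) blocks of minibatch_size rows.
std::vector<std::pair<std::size_t, std::size_t>> blocks(std::size_t n, std::size_t minibatch_size) {
    assert(minibatch_size > 0);
    std::vector<std::pair<std::size_t, std::size_t>> out;
    for (std::size_t begin = 0; begin < n; begin += minibatch_size)
        out.emplace_back(begin, std::min(begin + minibatch_size, n));  // last block may be short
    return out;
}
```

For example, 10 rows with minibatch_size 4 give the blocks [0, 4), [4, 8) and [8, 10).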

|

|

The log stream to use to record the objective function during training.

|

|

|

DEPRECATED options in the construction of the model through setModel.

|

|

|

the use() method produces an output vector of size outputsize().

Definition at line 135 of file Learner.h. Referenced by oldread(), oldwrite(), and outputsize(). |

|

|

Report test progress in vlog (see below) every that many iterations: for every nth test sample, a "Test sample #n" line is printed in vlog (where n is the value of report_test_progress_every). Definition at line 156 of file Learner.h. Referenced by Learner(). |

|

|

save learner at each epoch?

Definition at line 172 of file Learner.h. Referenced by measure(), oldread(), and oldwrite(). |

|

|

Definition at line 173 of file Learner.h. Referenced by measure(). |

|

|

In the data VMats, the inputsize() input columns are followed by targetsize() target columns.

Definition at line 134 of file Learner.h. Referenced by oldread(), oldwrite(), and targetsize(). |
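The column layout (inputsize() input columns followed by targetsize() target columns) can be illustrated by splitting one data row. This is a hypothetical sketch: RowParts and splitRow() are invented, and treating any remaining weightsize() columns as trailing weights is an assumption rather than something stated in this section.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical container for the three parts of a data row.
struct RowParts {
    std::vector<double> input, target, weight;
};

// Splits a row as [input columns | target columns | weight columns (assumed trailing)].
RowParts splitRow(const std::vector<double>& row, std::size_t inputsize,
                  std::size_t targetsize, std::size_t weightsize) {
    assert(row.size() == inputsize + targetsize + weightsize);
    auto it = row.begin();
    RowParts parts;
    parts.input.assign(it, it + inputsize);
    it += inputsize;
    parts.target.assign(it, it + targetsize);
    it += targetsize;
    parts.weight.assign(it, it + weightsize);
    return parts;
}
```

Because the layout is positional, the sizes alone determine where each part starts, which is why inputsize() and targetsize() must match the data.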

|

|

Definition at line 195 of file Learner.h. Referenced by computeCost(), costNames(), costsize(), makeDeepCopyFromShallowCopy(), oldread(), oldwrite(), and setTestCostFunctions(). |

|

|

Definition at line 145 of file Learner.h. Referenced by Learner(), measure(), oldread(), oldwrite(), and setTestDuringTrain(). |

|

|

Open streams in which test results are saved.

Definition at line 80 of file Learner.h. Referenced by freeTestResultsStreams(), getTestResultsStream(), and openTestResultsStreams(). |

|

|

test sets to test on during train

Definition at line 149 of file Learner.h. Referenced by getTestDuringTrain(), measure(), openTestResultsStreams(), and setTestDuringTrain(). |

|

|

Definition at line 196 of file Learner.h. Referenced by computeTestStatistics(), makeDeepCopyFromShallowCopy(), oldread(), oldwrite(), setTestStatistics(), test(), and testResultsNames(). |

|

|

test during train specifications

Definition at line 144 of file Learner.h. Referenced by setTestDuringTrain(). |

|

|

Definition at line 62 of file Learner.cc. |

|

|

Definition at line 58 of file Learner.cc. Referenced by computeCostsFromOutputs(), and computeOutput(). |

|

|

Definition at line 61 of file Learner.cc. Referenced by computeCosts(). |

|

|

Definition at line 59 of file Learner.cc. Referenced by computeCostsFromOutputs(). |

|

|

Definition at line 60 of file Learner.cc. Referenced by computeCostsFromOutputs(). |

|

|

Definition at line 76 of file Learner.h. Referenced by useAndCostOnTestVec(). |

|

|

Definition at line 560 of file Learner.h. Referenced by getTrainCost(), and setTrainCost(). |

|

|

File stream in which objectives and costs are saved during training.

Definition at line 79 of file Learner.h. Referenced by getTrainObjectiveStream(), openTrainObjectiveStream(), and ~Learner(). |

|

|

the current set being used for training

Definition at line 148 of file Learner.h. Referenced by basename(), getTrainingSet(), and setTrainingSet(). |

|

|

number of elements above which a file VMatrix rather

Definition at line 71 of file Learner.cc. Referenced by test(). |

|

|

**Next generation** learners allow inputs to be anything, not just Vec

|

|

|

The log stream to which all the verbose output from this learner should be sent.

Definition at line 207 of file Learner.h. Referenced by computeLeaveOneOutCosts(), Learner(), measure(), stop_if_wanted(), and test(). |

|

|

Definition at line 136 of file Learner.h. Referenced by weightsize(). |

1.3.7
